Optimized Chips, Systems, and Software: NVIDIA GPUs Excel in Machine Learning, Delivering Unmatched Performance and Efficiency to Millions.

GPUs are the backbone of the burgeoning generative AI landscape, often described as the rare earth metals or gold of this era. Their pivotal role stems from three core technical aspects: parallel processing capabilities, scalability to supercomputing levels, and an extensive software stack tailored for AI applications. Together, these attributes let GPUs compute faster and more energy-efficiently than CPUs in AI training, inference, and a wide range of other applications.

Stanford’s Human-Centered AI group highlighted a staggering 7,000-fold performance increase in GPUs since 2003, with a remarkable 5,600-fold surge in the price-performance ratio. Epoch’s analysis reinforced GPUs as the primary platform driving machine learning advancements and noted their central role in recent AI progress.

Furthermore, a 2020 study assessing AI technology for the U.S. government found GPUs to be one to three orders of magnitude more cost-effective than leading-node CPUs when both production and operating costs are counted. NVIDIA’s GPUs have increased AI inference performance 1,000-fold over the last decade, according to Bill Dally, the company’s chief scientist, speaking at the Hot Chips conference. These developments underscore the central role of NVIDIA GPUs in the evolution of generative AI.

GPU Dominance in AI: The ChatGPT Benchmark

ChatGPT, powered by thousands of NVIDIA GPUs, is a prominent example of what GPUs can do for AI. This large language model (LLM), deployed for generative AI services, has been used by more than 100 million people. Since its launch in 2018, MLPerf, the industry benchmark for AI, has consistently documented the leading performance of NVIDIA GPUs in both AI training and inference. Notably, recent advancements like the NVIDIA Grace Hopper Superchips and TensorRT-LLM inference software have raised performance further, with up to an 8x boost in inference speed and more than a 5x reduction in energy consumption and total cost of ownership. NVIDIA GPUs have also consistently outperformed CPUs across multiple MLPerf tests.

Inside the AI Engine

Delving deeper into the synergy between GPUs and AI, the architecture of AI models resembles intricate mathematical layers akin to a multi-tiered lasagna composed of linear algebra equations. With their thousands of cores operating in parallel, NVIDIA GPUs serve as powerful tools for processing these equations and executing AI models efficiently. The evolution of GPU cores, notably the Tensor Cores, has significantly amplified their processing capabilities for neural networks. These cores, 60x more powerful than their initial designs, now incorporate advanced features like the Transformer Engine, adept at adjusting to precision requirements for transformer models—a fundamental class for generative AI.
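To make the layered picture concrete, here is a minimal sketch in plain Python of a two-layer network expressed as stacked linear algebra. The weights, layer sizes, and function names are made up for illustration and are not taken from any NVIDIA library. The point it tries to show is that every output value in a layer is an independent dot product, which is the kind of work a GPU can spread across many cores at once.

```python
# Illustrative sketch only: a tiny two-layer network written as plain linear algebra.
# All names, weights, and sizes are hypothetical and chosen for demonstration.

def dense_layer(weights, bias, inputs):
    """Compute relu(W @ x + b) for one layer.

    Each output element is an independent dot product, so on a GPU
    every row could be handled by a separate thread at the same time.
    Here the rows are simply processed one after another.
    """
    outputs = []
    for row, b in zip(weights, bias):
        total = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(max(0.0, total))  # ReLU activation
    return outputs


def forward(layers, inputs):
    """Pass the input through every layer in order, like stacked sheets."""
    activations = inputs
    for weights, bias in layers:
        activations = dense_layer(weights, bias, activations)
    return activations


layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 1.0]),  # layer 1: 2 inputs -> 2 outputs
    ([[2.0, 1.0]], [-1.0]),                   # layer 2: 2 inputs -> 1 output
]
result = forward(layers, [3.0, 1.0])
print(result)
```

Real models apply the same pattern with matrices holding millions or billions of weights, which is why hardware built for parallel multiply-and-add operations matters so much.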

Scaling Up Models and Systems

The complexity of AI models is growing nearly 10x annually. NVIDIA’s GPU systems have kept pace with this growth, scaling up to supercomputing levels through technologies like NVLink interconnects and Quantum InfiniBand networks. For instance, the DGX GH200, a high-memory AI supercomputer, combines up to 256 NVIDIA GH200 Grace Hopper Superchips into a single data-center-sized GPU system with 144 terabytes of shared memory. The latest NVIDIA H200 Tensor Core GPUs, introduced in November 2023, incorporate up to 288 gigabytes of HBM3e memory.
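The scaling figures above can be checked with some quick arithmetic. The sketch below is a back-of-the-envelope calculation only; it assumes binary units (1 terabyte = 1,024 gigabytes) and a steady 10x annual growth rate, both of which are simplifications, and the variable names are illustrative.

```python
# Back-of-the-envelope arithmetic for the figures quoted in the text.
# Assumption: 1 TB = 1024 GB (binary units).

SUPERCHIPS = 256          # GH200 Superchips in a full DGX GH200
SHARED_MEMORY_TB = 144    # total shared memory quoted for the system
GB_PER_TB = 1024          # assumed conversion factor

# Memory contributed by each superchip, in gigabytes.
per_chip_gb = SHARED_MEMORY_TB * GB_PER_TB / SUPERCHIPS

# Assumption: model complexity grows a steady 10x per year.
ANNUAL_GROWTH = 10
growth_after_3_years = ANNUAL_GROWTH ** 3

print(f"Memory per superchip: {per_chip_gb:.0f} GB")
print(f"Model growth after 3 years: {growth_after_3_years}x")
```

Under these assumptions, each superchip contributes 576 gigabytes to the shared pool, and three years of 10x annual growth compounds to a 1,000x increase in model complexity.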

Expanding Software Horizons

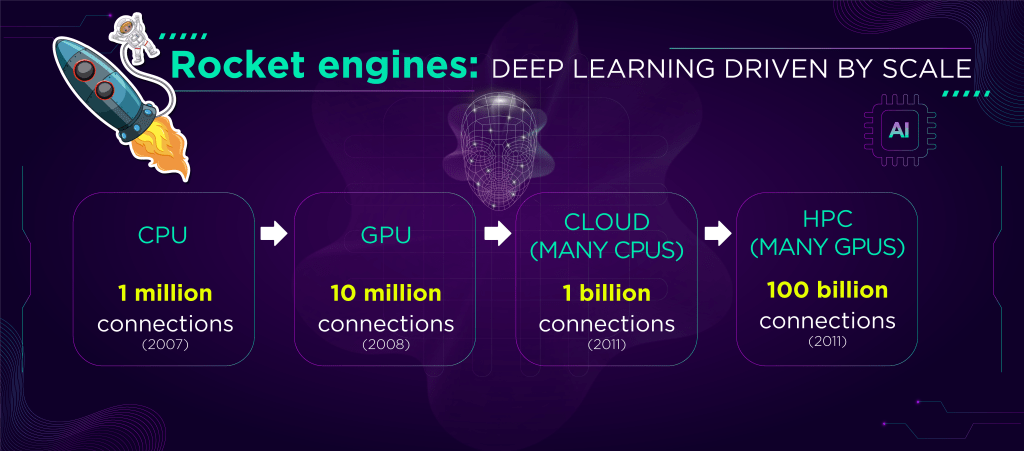

A rich array of GPU software has flourished since 2007, covering every facet of AI from deep-tech features to high-level applications. NVIDIA’s AI platform encompasses hundreds of software libraries and applications, including the CUDA programming language and the cuDNN-X library for deep learning. NVIDIA NeMo, a user-friendly framework, lets users build, customize, and run inference on generative AI models. Many of these elements are available as open-source software, and they have been bundled into the NVIDIA AI Enterprise platform for companies that need robust security and comprehensive support. They are also increasingly accessible through major cloud service providers as APIs and services on NVIDIA DGX Cloud.

Accelerating AI: A 70x Leap

In 2008, AI pioneer Andrew Ng, then a Stanford researcher, reported a notable success. Using two NVIDIA GeForce GTX 280 GPUs, his team achieved a 70x speedup over CPUs. Processing an AI model with 100 million parameters, a task that previously took weeks, could now be completed within a single day.

The findings highlighted the immense computational capabilities of modern graphics processors compared to multicore CPUs, signaling a potential revolution in deep unsupervised learning methods. Ng continued to harness GPUs for larger-scale models at Google Brain and Baidu, later contributing to the founding of Coursera, an online education platform teaching numerous AI students.

Ng’s influence extended to renowned figures like Geoff Hinton, considered the father of modern AI. His advocacy for GPU technology as the future of machine learning resonated strongly, encouraging widespread adoption among researchers during talks at NIPS (now NeurIPS) and discussions with Hinton, emphasizing CUDA’s role in constructing extensive neural networks.

Accelerating Progress with GPUs

Anticipated strides in AI promise extensive impacts on the global economy. A McKinsey report projected that generative AI could contribute between $2.6 trillion and $4.4 trillion annually across the 63 use cases it examined in sectors such as banking, healthcare, and retail. In line with that outlook, Stanford’s 2023 AI report found that most business leaders intend to increase their AI investments.

Over 40,000 enterprises leverage NVIDIA GPUs for AI and accelerated computing, rallying a global community of 4 million developers. Together, they propel advancements in science, healthcare, finance, and virtually every sector.

NVIDIA recently reported a 700,000x speedup in work that uses AI to combat climate change by keeping carbon dioxide out of the atmosphere. It is one of several instances in which NVIDIA has used GPU performance to push AI’s boundaries.
