NVIDIA introduced the NVIDIA Blackwell platform at GTC in March. The platform promises to run generative AI on trillion-parameter large language models (LLMs) at up to 25x lower cost and energy consumption than the NVIDIA Hopper architecture.

What is the NVIDIA Blackwell Platform?

Blackwell has major implications for AI workloads, and its capabilities can also help drive discoveries across all types of scientific computing applications, including traditional numerical simulation.

Accelerated computing and AI support sustainable computing by reducing energy costs, and many scientific computing applications already benefit. Compared with traditional CPU-based systems, weather can be simulated at 200x lower cost and with 300x less energy, while digital twin simulations achieve 65x lower cost and 58x less energy consumption.

How is Blackwell Boosting Scientific Computing Simulations?

Blackwell GPUs accelerate scientific computing and physics-based simulation by offering 30% more FP64 and FP32 FMA performance than Hopper.

Physics-based simulation is widely used to fine-tune product designs. Simulating products saves researchers and developers billions of dollars across everything from aircraft and trains to bridges, silicon chips, and medicines.

Traditionally, ASICs have been designed mostly on CPUs through a long and complex workflow, in which analog analysis determines the voltages and currents in the circuit.
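
At its core, SPICE-class circuit simulation solves Kirchhoff's current-law equations for the node voltages of a circuit. The following is a minimal sketch of textbook DC nodal analysis on a hypothetical three-resistor circuit. It is not how any commercial simulator is implemented. The circuit values and the names `solve_linear` and `node_voltages` are illustrative assumptions.

```python
# Textbook DC nodal analysis: build G @ v = i and solve for node voltages.
# Hypothetical circuit: a 10 V source drives node 1 through R1, R2 joins
# node 1 to node 2, and R3 runs from node 2 to ground.

def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def node_voltages(vs, r1, r2, r3):
    g1, g2, g3 = 1 / r1, 1 / r2, 1 / r3
    # The source plus R1 is replaced by its Norton equivalent:
    # a current vs/r1 into node 1 with conductance g1 to ground.
    g = [[g1 + g2, -g2],
         [-g2, g2 + g3]]
    i = [vs * g1, 0.0]
    return solve_linear(g, i)


v1, v2 = node_voltages(10.0, 1e3, 2e3, 2e3)
print(f"V1 = {v1:.3f} V, V2 = {v2:.3f} V")
```

Production simulators assemble much larger sparse systems. They also use nonlinear transistor models that require Newton iterations, each of which needs a linear solve like this one. That is the kind of work the article describes moving onto GPUs.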

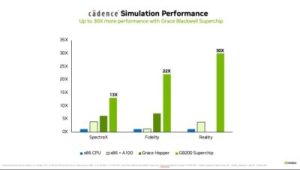

But this is changing. The Cadence SpectreX simulator, for example, streamlines analog circuit design. SpectreX circuit simulations could run 13 times faster on a GB200 Grace Blackwell Superchip, which links Blackwell GPUs with Grace CPUs, than on a standard CPU.

Another area is GPU-accelerated computational fluid dynamics (CFD), which is fast becoming a mainstream tool that engineers and equipment designers use to predict how their designs will behave. Cadence Fidelity runs CFD simulations that could be as much as 22 times faster on GB200 systems than on CPU-powered systems. With parallel scalability and 30TB of memory per GB200 NVL72 rack, it becomes possible to capture flow detail that was previously out of reach.

In another example, Cadence Reality’s digital twin software creates a virtual copy of a physical data center, including its servers, cooling, and power systems. Engineers can use this virtual model to test various configurations and scenarios before they go live, saving time and expense.

The secret behind Cadence Reality’s capabilities is its physics-based algorithms that model how heat, airflow, and power usage affect data centers. These models help engineers and data center operators plan capacity, anticipate operational issues, and optimize data center layout and operations for greater efficiency and higher capacity utilization. Simulations on Blackwell GPUs are estimated to run up to 30 times faster than on CPUs, shrinking timelines and lowering energy use.
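
A digital twin like this resolves full 3D airflow. Its simplest building block, however, is the steady-state energy balance for air-cooled equipment, P = ρ · c_p · Q · ΔT. Here P is the heat load, Q is the volumetric airflow, and ΔT is the air temperature rise. The following is a minimal lumped sketch, not Cadence Reality's method. The rack power, inlet temperature, and approximate air properties are illustrative assumptions.

```python
# Lumped steady-state energy balance for one air-cooled rack:
#   P = rho * cp * Q * dT   =>   dT = P / (rho * cp * Q)
# All inputs are illustrative assumptions, not values from any real facility.

AIR_DENSITY = 1.2   # kg/m^3, approximate for air near room temperature
AIR_CP = 1005.0     # J/(kg*K), approximate specific heat of air


def exhaust_temperature(inlet_c, power_w, airflow_m3s):
    """Exhaust air temperature (deg C) for a given heat load and airflow."""
    delta_t = power_w / (AIR_DENSITY * AIR_CP * airflow_m3s)
    return inlet_c + delta_t


def required_airflow(power_w, max_delta_t):
    """Airflow (m^3/s) that keeps the temperature rise at max_delta_t."""
    return power_w / (AIR_DENSITY * AIR_CP * max_delta_t)


# Hypothetical 20 kW rack with 24 C inlet air.
for flow in (1.0, 1.5, 2.0):
    t_out = exhaust_temperature(24.0, 20_000, flow)
    print(f"{flow:.1f} m^3/s -> exhaust {t_out:.1f} C")
print(f"airflow for a 10 K rise: {required_airflow(20_000, 10.0):.2f} m^3/s")
```

A full simulation replaces this single balance with coupled heat and airflow equations over the entire room. That is why the workload grows large enough to benefit from GPU acceleration.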

#1 Advancing AI for Scientific Computing

The introduction of new Blackwell accelerators and networking ushers in a new era of performance for advanced simulations. The NVIDIA GB200 architecture features a second-generation Transformer Engine to accelerate LLM inference workloads.

This delivers an unprecedented 30x acceleration over the previous H100 generation on demanding workloads such as the 1.8-trillion-parameter GPT-MoE model. Such progress opens up new possibilities for HPC, allowing LLMs to process vast amounts of scientific data quickly and extract meaningful insights, helping scientists reach breakthroughs faster.

Scientists at Sandia National Laboratories are working on a universal copilot for parallel programming with LLMs. Modern AI is good at generating conventional serial code, but it struggles to generate the parallel code that HPC work requires. To tackle this problem, Sandia’s team set out to do something ambitious: generate parallel code in Kokkos, a specialized C++ programming model developed by multiple national labs to run tasks across huge numbers of processors in some of the world’s fastest supercomputers.

Using an AI technique called retrieval-augmented generation (RAG), Sandia combines information-retrieval capabilities with language generation models to build a Kokkos database. The first results are promising: several RAG models can automatically generate Kokkos code for parallel computing. By overcoming barriers to AI-generated parallel code, Sandia seeks to unlock new possibilities for HPC on the world’s premier supercomputers, from leading-edge renewables research to climate science to drug discovery.
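
The general RAG pattern works in two steps. First, retrieve the reference snippets most relevant to a request. Then prepend them to the prompt so the model can ground its output in real examples. The following is a minimal sketch of the retrieval half, assuming a tiny hypothetical snippet database and simple bag-of-words cosine similarity. Sandia's actual retriever, embeddings, and models are not described in the source. `SNIPPETS`, `retrieve`, and `build_prompt` are illustrative names only, and the final call to a language model is omitted.

```python
# Minimal RAG retrieval sketch (hypothetical data and scoring, not Sandia's system).
import math
import re
from collections import Counter

# Hypothetical "database": (description, Kokkos code) pairs.
SNIPPETS = [
    ("element-wise loop over an array, axpy, independent iterations",
     'Kokkos::parallel_for("axpy", n, KOKKOS_LAMBDA(const int i) { y(i) += a * x(i); });'),
    ("sum reduction, dot product, accumulate a scalar across iterations",
     'Kokkos::parallel_reduce("dot", n, KOKKOS_LAMBDA(const int i, double& s) { s += x(i) * y(i); }, result);'),
    ("allocate an array in device memory with a view",
     'Kokkos::View<double*> x("x", n);'),
]


def tokens(text):
    """Lowercase word counts used as a crude document vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, k=1):
    """Return the k snippets whose descriptions best match the query."""
    q = tokens(query)
    ranked = sorted(SNIPPETS, key=lambda s: cosine(q, tokens(s[0])), reverse=True)
    return ranked[:k]


def build_prompt(query, k=1):
    """Augment the user request with retrieved reference code."""
    context = "\n".join(code for _, code in retrieve(query, k))
    return f"Reference Kokkos code:\n{context}\n\nTask: {query}\nWrite Kokkos C++ code."


print(build_prompt("compute the dot product of two arrays in parallel"))
```

In a real system, the word-count vectors would typically be replaced by learned embeddings. The assembled prompt would then be sent to an LLM to generate the final code.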

#2 Advancing Quantum Computing

Quantum computing opens doors to transformative breakthroughs in fusion energy, climate research, drug discovery, and various other fields. To expedite progress, researchers are actively simulating future quantum computers on NVIDIA GPU-based systems and software, facilitating the rapid development and testing of quantum algorithms.

The NVIDIA CUDA-Q platform plays a pivotal role in this endeavor by enabling the simulation of quantum computers and developing hybrid applications. With a unified programming model for CPUs, GPUs, and QPUs (quantum processing units), CUDA-Q accelerates the pace of quantum algorithm development.
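
Simulating a quantum computer on classical hardware typically means tracking a state vector of 2^n complex amplitudes and applying each gate as an update to that vector. The following is a library-free sketch of that idea, preparing a two-qubit Bell state. It is not CUDA-Q's API or implementation. `apply_1q`, `apply_cnot`, and the qubit-ordering convention (qubit 0 as the least significant bit) are assumptions made for illustration.

```python
# Minimal state-vector simulator (illustration only, not CUDA-Q).
import math


def apply_1q(state, gate, target):
    """Apply a 2x2 gate to qubit `target` (qubit 0 = least significant bit)."""
    out = state[:]
    bit = 1 << target
    for i in range(len(state)):
        if i & bit == 0:  # visit each (|..0..>, |..1..>) amplitude pair once
            a0, a1 = state[i], state[i | bit]
            out[i] = gate[0][0] * a0 + gate[0][1] * a1
            out[i | bit] = gate[1][0] * a0 + gate[1][1] * a1
    return out


def apply_cnot(state, control, target):
    """Swap target-bit amplitudes on basis states where `control` is 1."""
    out = state[:]
    c, t = 1 << control, 1 << target
    for i in range(len(state)):
        if i & c and not i & t:
            out[i], out[i | t] = state[i | t], state[i]
    return out


H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

n = 2
state = [0j] * (2 ** n)
state[0] = 1 + 0j                      # start in |00>
state = apply_1q(state, H, 0)          # superposition on qubit 0
state = apply_cnot(state, control=0, target=1)  # entangle qubits 0 and 1
probs = {format(i, f"0{n}b"): abs(a) ** 2 for i, a in enumerate(state)}
print(probs)
```

Every gate touches the whole vector, so simulation cost grows with 2^n. GPU-based simulators parallelize exactly these amplitude updates.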

This platform is already driving significant advancements in various domains. For instance, it enhances chemistry workflows at BASF, supports high-energy and nuclear physics research at Stony Brook, and facilitates quantum chemistry simulations at NERSC.

The NVIDIA Blackwell architecture is poised to take quantum simulations to new levels. Its latest NVIDIA NVLink multi-node interconnect technology speeds up data transfer, delivering substantial speedups for quantum simulations.
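
Interconnect matters because a full state vector for n qubits holds 2^n amplitudes, so every added qubit doubles the memory required. The following short calculation shows how quickly the footprint grows, assuming 8-byte single-precision complex amplitudes (16 bytes in double precision). It deliberately does not quote per-GPU memory capacities. `state_vector_bytes` is an illustrative helper name.

```python
# Memory needed to store a full n-qubit state vector of 2**n amplitudes.
BYTES_PER_AMPLITUDE = {"complex64": 8, "complex128": 16}


def state_vector_bytes(n_qubits, dtype="complex64"):
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE[dtype]


for n in (30, 34, 36, 40):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n} qubits (complex64): {gib:,.0f} GiB")
```

Once the state no longer fits on one GPU, it must be sharded across many GPUs and nodes. Gates that act on qubits spanning shards then require amplitudes to be exchanged, so data-transfer speed directly limits simulation speed.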