Deploying machine learning (ML) in production is a pivotal step: the model becomes one critical component among several in a larger operational system. While conventional ML education emphasizes learning techniques and model creation pipelines, the integration of ML and non-ML components within a system often receives insufficient attention.

Understanding the broader system is essential for key design decisions, such as how users interact with the system and how it affects its wider environment. Whether the system acts autonomously on ML predictions or requires human intervention shapes usability, safety, and fairness, and determines how resilient the system is when the model makes errors.

This exploration into the relationship between machine learning and the more comprehensive system underlines the significance of a holistic view. Data scientists excel in modeling real-world data, yet successful deployment demands skills akin to software engineering and DevOps. Statistics from VentureBeat and Redapt spotlight the high failure rates of data science projects in reaching production, emphasizing collaborative team efforts as pivotal for success.

The ultimate goal of crafting an ML model is problem-solving, actualized only when the model is operational and actively used. Redapt’s insights highlight the disparity between IT’s stability focus and data scientists’ experimental mindset. Bridging this gap becomes crucial for effective model deployment and sustained performance in production.

While the deployment phase often aligns with software engineering, data scientists empowered with deployment skills gain a competitive edge, particularly in lean organizations. Tools like TFX, MLflow, and Kubeflow streamline the deployment process, and data scientists benefit from learning to use them quickly.

Common Reasons for Machine Learning Project Failures in Production

Machine learning has transcended theoretical concepts, becoming a pervasive technology influencing daily activities—from navigation assistance on Google Maps to personalized content suggestions on Netflix. Despite the widespread utilization of ML, many projects falter before reaching fruition.

According to Gartner’s report, only 53% of projects make it from prototype to production. The figure is even lower in organizations new to AI, where the failure rate may reach 90%. While tech giants like Google, Facebook, and Amazon excel in ML deployment, numerous proficient teams struggle to actualize their initiatives. The question arises: why do these failures occur, and how can they be rectified? This section identifies the four primary challenges confronting modern data teams in scaling ML adoption and offers insights into overcoming these hurdles.

Misalignment between Business Needs and ML Objectives

- Strategic Clarity: Initiating with a clear problem statement rather than a preconceived solution.

- Defined Success Metrics: Establishing measurable success criteria for ML projects to avoid protracted experimentation phases without achievable outcomes.

- Realistic Expectations: Acknowledging that ML isn’t flawless and may not attain machine-like precision. Often, merely achieving parity with human performance represents a significant achievement.

Model Training Challenges

- Data Quality and Representativeness: Emphasizing clean, sizable, and representative training datasets essential for generalizable model performance.

- Overfitting and Underfitting: Addressing models becoming overly tailored to training data (overfitting) or inadequately trained (underfitting), impacting model accuracy in real-world scenarios.
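One common way to spot overfitting and underfitting is to compare a model's score on the training data with its score on held-out validation data. The sketch below illustrates that comparison. The function name `diagnose_fit` and the thresholds `min_score` and `max_gap` are illustrative assumptions, not standard values, and should be tuned per project.

```python
def diagnose_fit(train_score: float, val_score: float,
                 min_score: float = 0.7, max_gap: float = 0.1) -> str:
    """Classify model fit from training and validation accuracy.

    min_score and max_gap are hypothetical thresholds chosen for illustration.
    """
    if train_score < min_score:
        # The model cannot even fit the data it was trained on.
        return "underfitting"
    if train_score - val_score > max_gap:
        # Strong on training data, much weaker on unseen data.
        return "overfitting"
    return "ok"


print(diagnose_fit(0.99, 0.72))  # large train/validation gap
print(diagnose_fit(0.61, 0.60))  # low score on both
print(diagnose_fit(0.88, 0.85))  # small gap, acceptable score
```

A check like this is only a first signal; it does not replace validation on data that is representative of production traffic.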

Testing and Validation Complexity

- Data Dynamics and Model Performance: Challenges arise in merging multiple data streams and updating models, potentially leading to overfitting or underfitting.

- Unforeseen Model Behavior: Even within proficient ML environments like Google, unexpected model behaviors can emerge during testing and validation.

Deployment and Operational Challenges

- Diverse Deployment Needs: Varied hosting and deployment requirements across ML projects demand various deployment strategies.

- Resource Allocation and Infrastructure: The resource-intensive nature of ML projects necessitates cross-functional alignment on deployment priorities and infrastructural needs.

Best Practices for Deploying Machine Learning Models in Production

1. Data Assessment

Before deploying machine learning models, a critical starting point is assessing data feasibility. This involves scrutinizing whether the available datasets effectively align with the requirements for running machine learning models. Questions arise around the adequacy and timeliness of data for predictive analysis.
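The adequacy and timeliness questions can be turned into an automated check. The following is a minimal sketch, assuming records arrive as dictionaries with a hypothetical `updated_at` timestamp field; the function name `assess_dataset`, the sample records, and every threshold are illustrative assumptions.

```python
from datetime import datetime, timedelta


def assess_dataset(records, required_fields, min_rows, max_age, now):
    """Return a list of problems found; an empty list means the data passes."""
    problems = []
    # Adequacy: is there enough data?
    if len(records) < min_rows:
        problems.append(f"too few rows: {len(records)} < {min_rows}")
    # Completeness: are required fields populated?
    for name in required_fields:
        missing = sum(1 for r in records if r.get(name) is None)
        if missing:
            problems.append(f"{name}: {missing} missing value(s)")
    # Timeliness: how old is the newest record?
    timestamps = [r["updated_at"] for r in records if r.get("updated_at")]
    if timestamps and now - max(timestamps) > max_age:
        problems.append("data is stale")
    return problems


# Hypothetical sample records for illustration only.
NOW = datetime(2024, 1, 10)
records = [
    {"customer_id": 1, "order_total": 12.5, "updated_at": datetime(2024, 1, 9)},
    {"customer_id": 2, "order_total": None, "updated_at": datetime(2024, 1, 8)},
]
print(assess_dataset(records, ["customer_id", "order_total"],
                     min_rows=2, max_age=timedelta(days=7), now=NOW))
```

Passing `now` explicitly keeps the check deterministic, which makes it easier to test and to rerun against historical snapshots.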

For instance, within the Quick-Service Restaurants (QSRs) domain, access to extensive customer data holds immense potential for machine-learning applications. The sheer volume of registered customer data provides a robust foundation for ML models.

Once the data risks are addressed, establishing a data lake environment proves pivotal. Such an environment, surpassing traditional data warehouses, streamlines access to diverse data sources, eliminating bureaucratic impediments.

Experimentation with these datasets becomes indispensable to ascertain their information richness, aligning with the envisioned business transformations. Establishing a scalable computing infrastructure for swift data processing is crucial, a fundamental necessity at this stage.

Once the diverse datasets have been cleansed, structured, and processed, a systematic cataloging approach becomes imperative. Cataloging ensures future accessibility and utilization of the data, enhancing its long-term value. Furthermore, instituting a robust governance and security system safeguards data sharing across diverse teams, fostering collaboration within a secure framework.

2. Evaluation of the Right Tech Stack

Once ML models have been selected, rigorous manual testing validates their viability. For example, in personalized email marketing, assessing whether promotional emails drive new conversions is pivotal to fine-tuning strategies.

Subsequently, selecting the apt technology stack is paramount. Empowering data science teams to choose from varied technology stacks encourages experimentation, aiding in identifying the one that optimizes ML production processes. The technology stack selected must undergo benchmarking, considering factors like stability, alignment with business use cases, scalability for future demands, and cloud compatibility.

3. Robust Deployment Approach

Establishing standardized deployment processes facilitates seamless testing and integration across various checkpoints, ensuring operational efficiency.

Data engineers are pivotal in refining the codebase, integrating models (as API endpoints or bulk processes), and crafting workflow automation such as an agile ML pipeline architecture for effortless team integration.
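The two integration styles mentioned here, an API endpoint and a bulk process, can share a single prediction function. The sketch below shows the idea with a toy linear model. The weights, feature names, and function names are hypothetical, and a real endpoint would sit behind a web framework rather than a bare function.

```python
import json

# Hypothetical model parameters, standing in for a trained model.
WEIGHTS = {"visits": 0.5, "basket_size": 0.25}
BIAS = 1.0


def predict(features: dict) -> float:
    """Core scoring logic shared by both integration paths."""
    return BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def handle_request(body: str) -> str:
    """Online path: one JSON request in, one JSON response out."""
    features = json.loads(body)
    return json.dumps({"prediction": predict(features)})


def score_batch(rows: list) -> list:
    """Bulk path: score many rows in one pass, e.g. in a nightly job."""
    return [predict(row) for row in rows]


print(handle_request('{"visits": 2, "basket_size": 4}'))
print(score_batch([{"visits": 0, "basket_size": 0},
                   {"visits": 2, "basket_size": 4}]))
```

Keeping `predict` separate from both wrappers means the online and bulk paths cannot silently diverge in how they score the same input.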

A conducive environment, encompassing appropriate datasets and models, stands as the cornerstone of any successful ML model deployment.

4. Post-Deployment Support and Testing

Implementing robust frameworks for logging, monitoring, and reporting results streamlines the otherwise intricate testing procedures.

Real-time testing and close monitoring of the ML environment are essential. In a sophisticated experimentation setup, feedback loops deliver test results to data engineering teams, facilitating model updates.

Vigilance against adverse or erroneous outcomes is crucial. Adherence to Service Level Agreements (SLAs), continuous data quality evaluation, and model performance monitoring are indispensable components of this phase, stabilizing the production environment gradually.
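Logging and SLA checks of this kind can be attached to the prediction call itself. The following is a minimal sketch using Python's standard `logging` module. The wrapper name `monitored_predict`, the logger name, and the 0.2-second latency budget are assumptions made for illustration.

```python
import logging
import time

logger = logging.getLogger("model_monitor")  # hypothetical logger name


def monitored_predict(model_fn, features, sla_seconds=0.2):
    """Call model_fn, log the outcome, and report whether latency met the SLA."""
    start = time.perf_counter()
    prediction = model_fn(features)
    elapsed = time.perf_counter() - start
    within_sla = elapsed <= sla_seconds
    # Record inputs, outputs, and latency for later reporting.
    logger.info("features=%s prediction=%s latency=%.4fs",
                features, prediction, elapsed)
    if not within_sla:
        logger.warning("SLA breach: %.4fs > %.4fs", elapsed, sla_seconds)
    return prediction, within_sla


logging.basicConfig(level=logging.INFO)
prediction, ok = monitored_predict(lambda f: f["x"] * 2, {"x": 21})
print(prediction, ok)
```

In production, the log records would typically be shipped to a central store so that SLA breaches and unusual predictions can be reported across all requests.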

5. Change Management & Communication

Clear and transparent communication across cross-functional teams proves instrumental in ensuring the success of ML models, mitigating risks proactively.

Collaboration between data engineering and data science teams becomes imperative during the model deployment phase. Granting data scientists control over the system facilitates code reviews and real-time monitoring of production outcomes. Additionally, training teams for new environments fosters adaptability and efficiency.

Post-Production Considerations

Continuous Architectural Enhancements

- Ongoing Maintenance: Despite successful deployment, continual efforts are required to refine production architectures.

- Gap Filling and System Updates: Address data gaps, monitor workflow intricacies, and implement regular system updates to ensure sustained operational efficiency.

Program Reusability

- Robust Reusable Frameworks: Opt for reusable programs during data preparation and training for scalability and resilience.

- Python File Adoption: Consider utilizing Python files for enhanced manageability and scalability, fostering improved work quality within repeatable pipelines.

- Code-Based Machine Learning Environment: Treat the ML environment as code, enabling the execution of end-to-end pipelines during significant events, ensuring repeatability.
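Treating the pipeline as code can be as simple as expressing every stage as a function driven by one configuration object, so the whole run can be repeated on demand. The sketch below assumes a toy task (fitting the slope of synthetic data). The `CONFIG` values and function names are hypothetical.

```python
import random

# All run parameters live in one place and can be version-controlled.
CONFIG = {"seed": 42, "n_samples": 100, "test_fraction": 0.2}


def prepare(config):
    """Generate synthetic (x, y) rows with y close to 2x, then split them."""
    rng = random.Random(config["seed"])  # fixed seed for repeatability
    rows = [(float(x), 2.0 * x + rng.gauss(0, 0.1))
            for x in range(1, config["n_samples"] + 1)]
    rng.shuffle(rows)
    n_test = int(len(rows) * config["test_fraction"])
    return rows[n_test:], rows[:n_test]


def train(train_rows):
    """Least-squares slope for the model y = slope * x."""
    return (sum(x * y for x, y in train_rows)
            / sum(x * x for x, _ in train_rows))


def evaluate(slope, test_rows):
    """Mean absolute error on the held-out rows."""
    return sum(abs(y - slope * x) for x, y in test_rows) / len(test_rows)


def run_pipeline(config):
    """Run data preparation, training, and evaluation end to end."""
    train_rows, test_rows = prepare(config)
    slope = train(train_rows)
    return {"slope": slope, "mae": evaluate(slope, test_rows)}


print(run_pipeline(CONFIG))
```

Because the seed and split are part of the configuration, rerunning `run_pipeline(CONFIG)` reproduces the same result, which is the repeatability property described above.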

Data Vigilance and Monitoring

- Continuous Data Focus: Maintain vigilance over input data, recognizing its pivotal role in model accuracy and performance.

- Robust Monitoring Systems: Implement monitoring systems to manage and track datasets, inputs, predictions, and potential changes in connected labels, ensuring data integrity.
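One simple form of input monitoring is to compare live feature values against the values seen during training. The sketch below measures how far the live mean has shifted, in units of the baseline standard deviation. This is a deliberately basic heuristic chosen for illustration, not a complete drift detector. The function names, the sample values, and the threshold of 2.0 are assumptions.

```python
from statistics import mean, stdev


def drift_score(baseline, live):
    """Shift of the live mean, measured in baseline standard deviations.

    Assumes the baseline has at least two distinct values (non-zero spread).
    """
    return abs(mean(live) - mean(baseline)) / stdev(baseline)


def has_drifted(baseline, live, threshold=2.0):
    """Flag drift when the shift exceeds a hypothetical threshold."""
    return drift_score(baseline, live) > threshold


# Hypothetical feature values for illustration only.
training_values = [10, 12, 11, 9, 10, 11, 10, 12]
print(has_drifted(training_values, [11, 10, 12, 10]))  # similar distribution
print(has_drifted(training_values, [15, 16, 14, 17]))  # shifted distribution
```

The same comparison can be applied to model predictions, which helps surface changes even when labels arrive late.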

Automation Integration

- Leveraging Automation: Recognize the significance of automation in expediting time-consuming tasks, freeing resources for critical areas like model evaluation and feature engineering.

- Streamlined Software Development: Automate repetitive tasks to streamline software development processes and allocate focus to crucial development stages.

Governance and Team Collaboration

- Enhanced Governance at Scale: As the team size expands in production, implement robust governance frameworks to manage distributed work and expedite deliveries.

- Centralized Team Hub: Create centralized hubs facilitating seamless connectivity and access to essential resources for the entire team, ensuring smooth operations and easy maintenance.

- Organizational Efficiency: Organize and manage the machine learning experiment meticulously, recognizing it as an independent and critical task within the broader operational context.

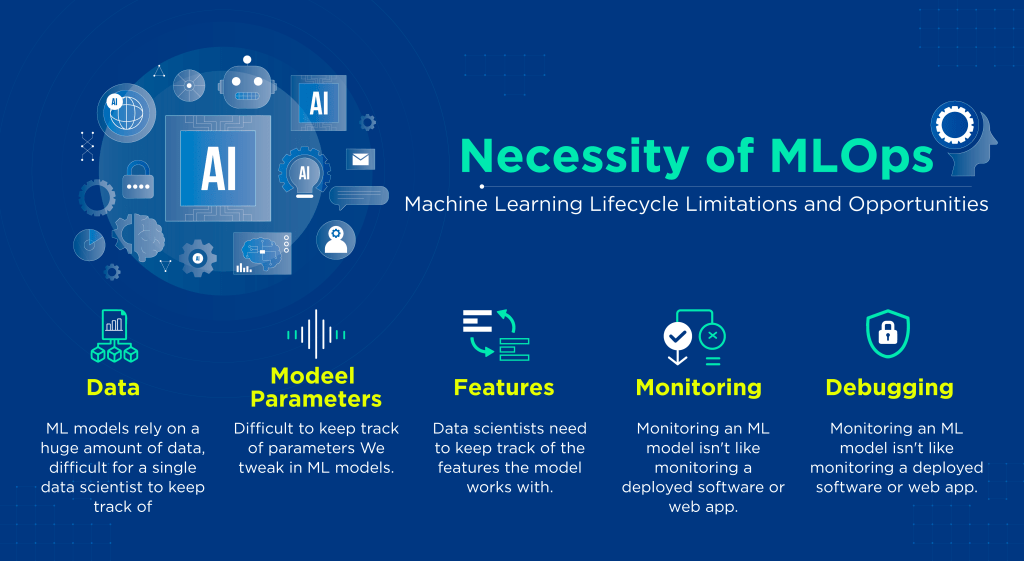

Significance of MLOps in Machine Learning Deployment

Implementing MLOps is crucial for several reasons integral to the success of machine learning deployments. Managing extensive data volumes, parameters, and feature engineering complexities is challenging for data scientists and engineers.

Monitoring and debugging ML models in production differ significantly from traditional software applications like web apps due to their reliance on real-world data. Tracking model performance amidst evolving real-world data necessitates constant monitoring and periodic model updates.

Before deploying ML models, key considerations involve MLOps capabilities, open source integration, machine learning pipelines, and leveraging MLflow.

- MLOps Capabilities: Define reusable steps encompassing data preparation, training, and scoring processes, facilitating frequent model updates and seamless integration with other AI services.

- Open Source Integration: Utilize open-source training frameworks, interpretable model frameworks, and deployment tools to accelerate ML solutions and ensure model fairness.

- Machine Learning Pipelines: Develop pipelines for data preparation, training configuration, validation, and deployment, ensuring efficiency through specific data subsets, diverse hardware, distributed processing, and version-controlled deployment.

- MLflow: Utilize MLflow, an open-source platform comprising crucial components for end-to-end ML lifecycle management, including experiment tracking, model versioning, deployment, and model serving.
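To make model versioning and version-controlled deployment concrete, the sketch below implements a tiny in-memory registry in plain Python. It is a stand-in for the idea only and does not use or imitate MLflow's actual API. The class name `ModelRegistry` and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class ModelRegistry:
    """Minimal in-memory registry: numbered versions plus one production pointer."""
    versions: Dict[int, Dict[str, Any]] = field(default_factory=dict)
    production: Optional[int] = None

    def register(self, model, metrics: dict) -> int:
        """Store a model with its metrics and return the new version number."""
        version = len(self.versions) + 1
        self.versions[version] = {"model": model, "metrics": metrics}
        return version

    def promote(self, version: int) -> None:
        """Point production at a registered version (also used for rollback)."""
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        self.production = version

    def production_model(self):
        """Return the model currently serving production traffic."""
        if self.production is None:
            raise RuntimeError("no production version set")
        return self.versions[self.production]["model"]


registry = ModelRegistry()
v1 = registry.register(lambda x: x * 2, {"accuracy": 0.81})  # toy models
v2 = registry.register(lambda x: x * 3, {"accuracy": 0.86})
registry.promote(v2)
print(registry.production_model()(2))
registry.promote(v1)  # roll back to the earlier version
print(registry.production_model()(2))
```

Keeping every version addressable is what makes rollback a one-line operation. A dedicated platform adds persistence, access control, and artifact storage on top of the same idea.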

Conclusion

Successfully deploying an ML model into production doesn’t need to be a daunting task if the essential factors are addressed before commencing the project. Prioritizing these considerations is paramount for the success of any ML initiative.

While this discussion may not encompass every aspect, it aims to serve as a foundational guide, offering insights into the fundamental approach required for an ML project’s deployment into production. Adopting these principles can significantly enhance the prospects of achieving successful and impactful results within machine learning deployment.

FAQs

1. What challenges are commonly faced when transitioning ML models to production?

Challenges include aligning ML objectives with business needs, ensuring model training accuracy, testing and validating models, deploying models effectively, and post-deployment support.

2. Why do many ML projects fail to reach production, and how can these failures be rectified?

Failures often occur due to misalignment between business needs and ML objectives, issues with model training, testing complexities, and challenges in deploying and maintaining models. Rectification involves setting clear objectives, ensuring data quality, rigorous testing, and effective deployment strategies.

3. What are the best practices for deploying ML models in production?

Best practices include assessing data feasibility, selecting the right tech stack, adopting a robust deployment approach, providing post-deployment support and testing, implementing change management and communication strategies, focusing on continuous architectural enhancements, and leveraging MLOps.

4. How crucial is data assessment before deploying ML models?

Data assessment is critical as it involves scrutinizing available datasets to ensure they align with model requirements. This step includes assessing data quality, establishing scalable computing infrastructure, cataloging data, and instituting robust governance and security.
