In the fast-moving technological world, integrating data and artificial intelligence (AI) has become a key driver of business transformation. AI’s ability to optimize operations and enhance customer experiences underscores the necessity for technology leaders to grasp the fundamental components that power these innovations: the Central Processing Unit (CPU), Graphics Processing Unit (GPU), and Neural Processing Unit (NPU).

CPUs, GPUs, and NPUs each play distinct roles in computing environments, significantly impacting performance and cost. Understanding these differences is essential for selecting the right hardware to support AI systems and optimize technological investments.

- Central Processing Unit (CPU): As the core component of any computer system, the CPU handles general-purpose processing tasks. It executes instructions, processes data, and drives overall system functionality. The CPU’s architecture, clock speed, and cache size are critical factors that determine its efficiency in multitasking and running various applications.

- Graphics Processing Unit (GPU): Initially designed for rendering graphics, GPUs are now pivotal in accelerating AI and machine learning tasks. Their ability to handle parallel processing makes them suitable for complex computations and large-scale data processing. GPUs excel in scenarios requiring high-performance computing and extensive data analysis.

- Neural Processing Unit (NPU): NPUs are specialized processors optimized for AI inference tasks. They are designed to efficiently execute neural network algorithms, making them ideal for applications requiring real-time decision-making and edge computing. NPUs offer significant advantages in scenarios involving deep learning and advanced AI models.

Understanding these differences in architecture and use cases is important for selecting the appropriate processor. The selection process involves evaluating each processor’s architectural strengths and aligning them with specific business needs. A deep understanding of each processor’s role enables technology leaders to make informed decisions, ensuring that their AI infrastructure is both cost-effective and high-performing.

As businesses continue to leverage data-driven AI, choosing the right processor—whether CPU, GPU, or NPU—becomes crucial for maintaining competitive advantage and meeting modern computing standards.

This article will explore the role of each processing unit, review the latest devices, and provide a comparative guide to facilitate a comprehensive understanding of CPUs, GPUs, and NPUs.

2. CPU (Central Processing Unit): The Traditional Workhorse

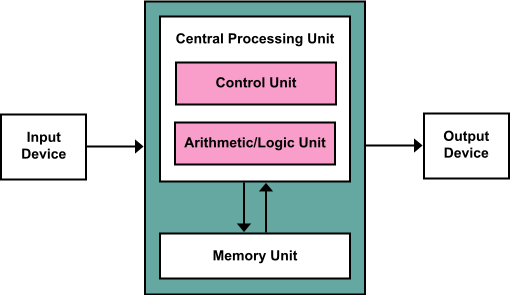

Gartner defines the CPU as the component of a computer system that controls the interpretation and execution of instructions. The CPU of a PC consists of a single microprocessor, while the CPU of a more powerful mainframe consists of multiple processing devices, in some cases hundreds of them. The term “processor” is often used to refer to a CPU.

The Central Processing Unit (CPU) is a critical component of a computer system, coordinating data input/output, processing, and storage. Installed in a socket on the motherboard, the CPU carries out data processing tasks and temporarily holds data, instructions, and intermediate results in its registers and cache.

How CPUs Operate: Understanding the Instruction Cycle

CPUs operate through a repeating instruction cycle, managed by the control unit and synchronized by the computer’s clock.

This sequence is known as the CPU instruction cycle. It repeats continuously while the computer runs, and how many instructions the CPU completes per second depends on its processing power, chiefly its clock speed and the number of instructions each core can complete per clock cycle.

The CPU instruction cycle comprises three fundamental operations:

- Fetch: Retrieves the next instruction from memory, at the address held in the program counter.

- Decode: Translates the binary instruction into control signals that direct the other CPU components.

- Execute: Carries out the decoded operation, such as an arithmetic calculation or a memory access, and stores the result.
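The fetch-decode-execute loop can be sketched as a toy interpreter. This is a minimal illustration under stated assumptions: the three-instruction set, the 12-bit instruction encoding, and the `ToyCPU` class are all hypothetical, and real CPUs pipeline and overlap these stages in hardware rather than running them strictly one after another.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCPU:
    """Hypothetical CPU with four registers and a 3-instruction ISA."""
    memory: list                                   # program as encoded instruction words
    registers: list = field(default_factory=lambda: [0] * 4)
    pc: int = 0                                    # program counter: address of next instruction
    halted: bool = False

    def fetch(self) -> int:
        # Fetch: read the instruction word at the program counter, then advance it.
        word = self.memory[self.pc]
        self.pc += 1
        return word

    @staticmethod
    def decode(word: int) -> tuple:
        # Decode: split the word into fields (assumed encoding:
        # bits 8-11 opcode, bits 4-7 register, bits 0-3 operand).
        return (word >> 8) & 0xF, (word >> 4) & 0xF, word & 0xF

    def execute(self, opcode: int, reg: int, operand: int) -> None:
        # Execute: perform the operation selected by the opcode.
        if opcode == 0x1:        # LOAD immediate: reg <- operand
            self.registers[reg] = operand
        elif opcode == 0x2:      # ADD: reg <- reg + registers[operand]
            self.registers[reg] += self.registers[operand]
        elif opcode == 0xF:      # HALT
            self.halted = True
        else:
            raise ValueError(f"unknown opcode {opcode:#x}")

    def run(self) -> list:
        # The instruction cycle repeats until the program halts.
        while not self.halted:
            self.execute(*self.decode(self.fetch()))
        return self.registers


# LOAD r0, 5; LOAD r1, 7; ADD r0, r1; HALT
program = [0x105, 0x117, 0x201, 0xF00]
print(ToyCPU(program).run())  # [12, 7, 0, 0]
```

Each pass through `run` performs exactly one fetch, one decode, and one execute, which is the cycle described above in its simplest, unpipelined form.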

To increase processing speed, some users skip standard performance upgrades, such as adding more memory or moving to a CPU with more cores, and instead “overclock” the processor, adjusting the computer clock so the CPU runs faster than its rated speed. Like “jailbreaking” a smartphone, overclocking can boost performance, but it may also harm the device, and manufacturers generally discourage it.

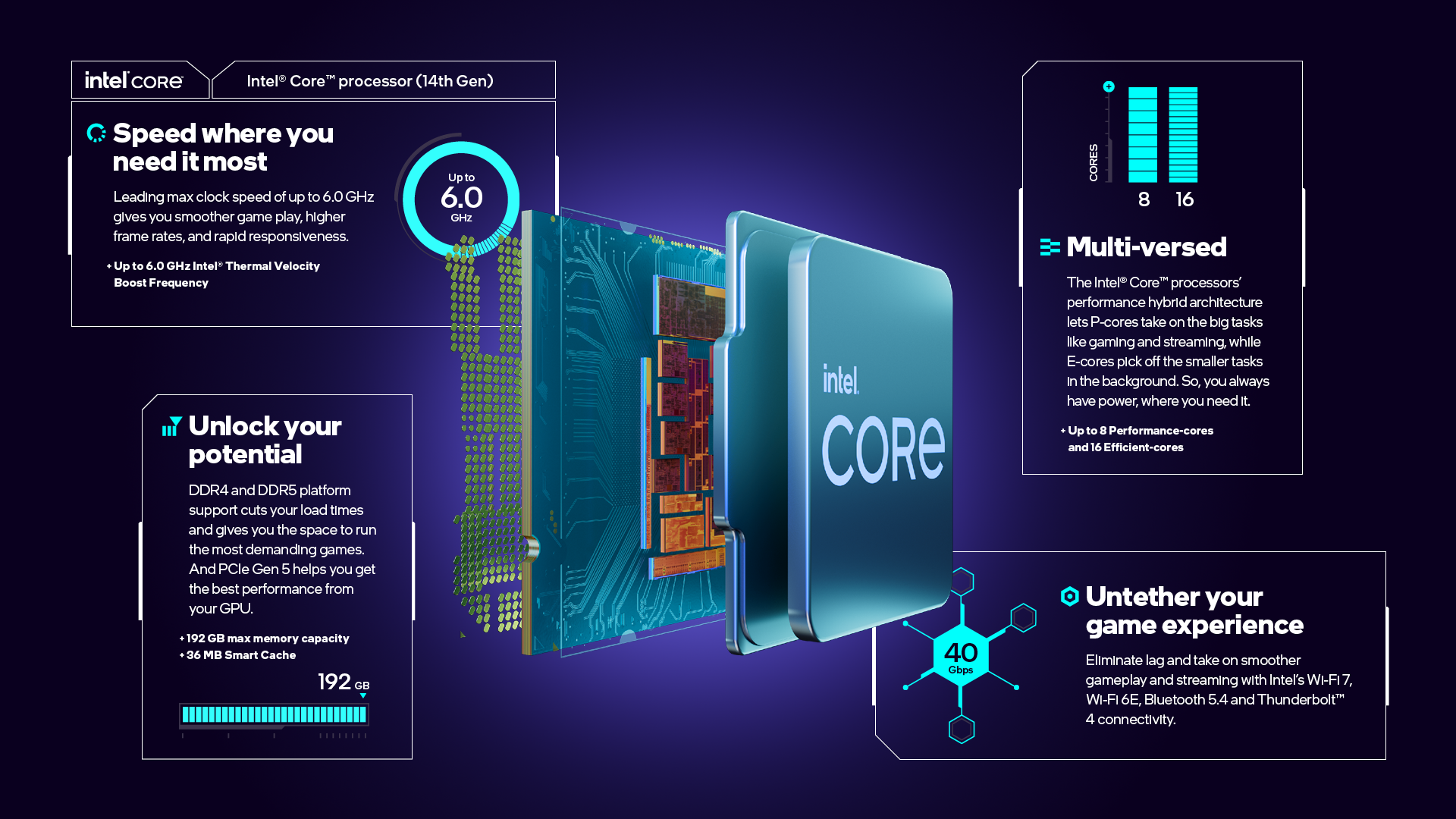

Top manufacturers and the CPUs they make

- Intel: Intel markets its processors and microprocessors through four product lines. Its flagship line is Intel Core. Its Xeon processors are targeted at businesses, including servers and workstations. Its Celeron and Pentium lines are considered slower and less powerful than the Core line.

- Advanced Micro Devices (AMD): AMD offers two types of processors: CPUs and APUs (accelerated processing units). APUs are CPUs equipped with integrated Radeon graphics. AMD’s high-speed, high-performance Ryzen microprocessors are popular with gamers and the video game industry.

Examples of CPU devices

- Intel i-series: A common CPU found in many desktop and laptop computers. The Core series includes the i3, i5, and i7 models. The i5 is a good choice for users who need more than just web browsing and email, but don’t need top-of-the-line power.

- Qualcomm Snapdragon: A common CPU found in smartphones and tablets.

- Intel Core i9: A high-performance processor from Intel.

- ARM, DEC Alpha, PA-RISC, SPARC, MIPS, and IBM’s PowerPC: These are well-known RISC processor architectures. RISC stands for reduced instruction set computer, a design philosophy that uses a smaller, simpler set of instructions.

Use Cases for CPU

Although CPUs are general-purpose processors, they are well suited to certain AI workloads, particularly those that do not parallelize well. Typical use cases include:

- Real-time inference and machine learning algorithms that do not easily parallelize

- Recurrent neural networks that rely on sequential data

- Recommender systems with high memory demands for embedding layers

- Models utilizing large-scale data samples, such as 3D data for inference and training

While CPUs are effective for tasks requiring sequential processing or complex statistical computations, their use in modern AI is limited. Most companies prefer the efficiency and speed of GPUs for AI tasks. However, some data scientists continue to develop AI algorithms using CPUs, focusing on serial processing rather than parallel approaches.
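The reason recurrent networks resist parallelization can be shown in a few lines. This is a minimal sketch of a scalar recurrent step, h_t = tanh(w_x * x_t + w_h * h_(t-1) + b); the weights and the `rnn_forward` and `elementwise` names are illustrative assumptions, not a production model.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Scalar recurrent layer: h_t = tanh(w_x * x_t + w_h * h_prev + b).

    Illustrative weights only. Each step reads the previous hidden state,
    so step t cannot start until step t-1 has finished.
    """
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # serial dependency on h_prev
        states.append(h)
    return states


def elementwise(inputs, w_x=0.5, b=0.0):
    # No dependency between elements: each output could be computed
    # independently, the kind of work GPUs spread across many cores.
    return [math.tanh(w_x * x + b) for x in inputs]


print(rnn_forward([1.0, 0.0, 0.0]))
print(elementwise([1.0, 0.0, 0.0]))
```

A change to the first input ripples through every later state of `rnn_forward`, while in `elementwise` it affects only the first output. That chain of dependencies is what keeps such workloads sequential.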

3. GPU (Graphics Processing Unit): The Parallel Processing Powerhouse

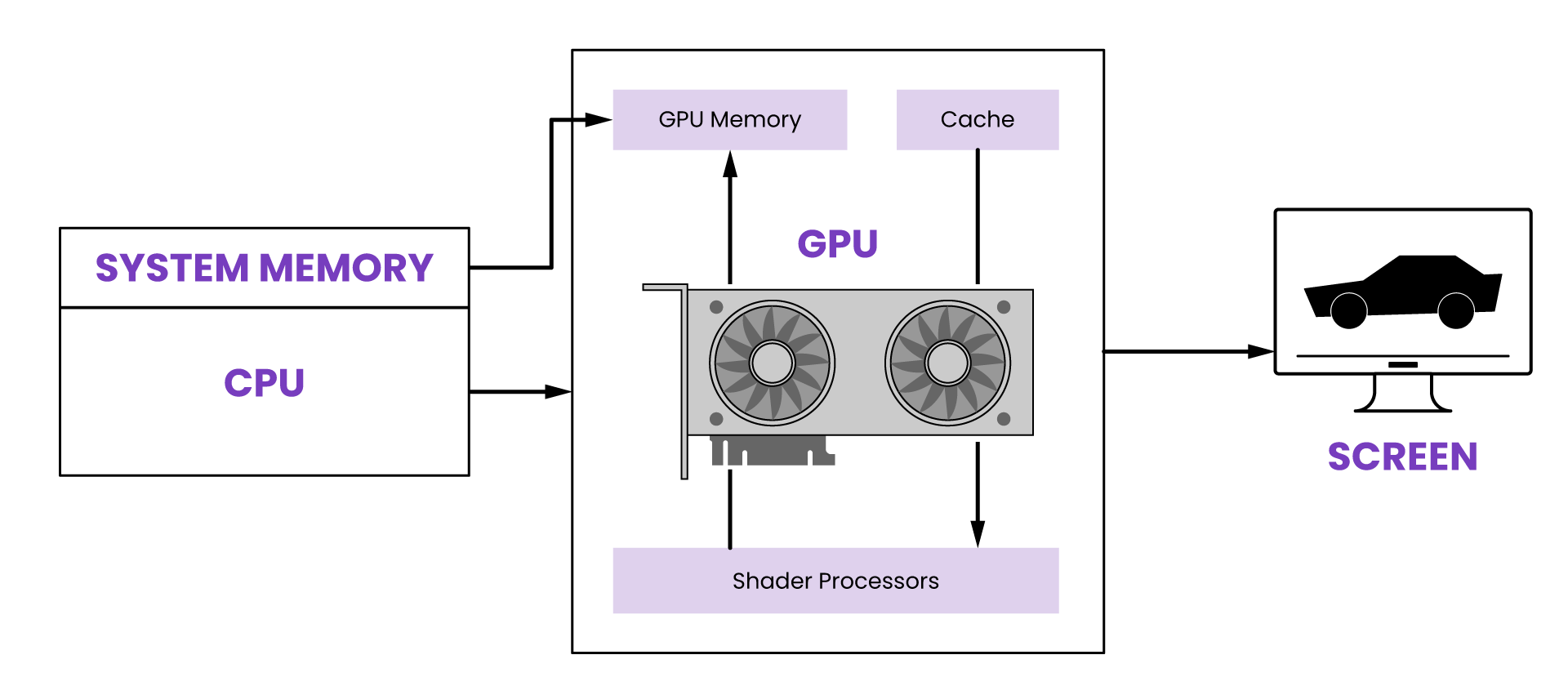

The Graphics Processing Unit (GPU) has become a critical component of modern computing, both for personal and business use. Designed for parallel processing, GPUs are employed in various applications, including graphics and video rendering. While initially known for their gaming capabilities, GPUs are increasingly used in creative production and artificial intelligence (AI).

Originally developed to accelerate 3D graphics rendering, GPUs have evolved to become more programmable and versatile. This flexibility enables graphics developers to create advanced visual effects with realistic lighting and shadowing. Additionally, GPUs are now widely used to accelerate workloads in high-performance computing (HPC) and deep learning.

How do GPUs Operate?

Modern GPUs consist of multiple multiprocessors (called streaming multiprocessors, or SMs, in NVIDIA’s terminology), each containing a block of shared memory, several processing cores, and their registers. The GPU also has constant memory and device memory on the board that houses it.

The functionality of a GPU varies depending on its purpose, manufacturer, chip specifications, and the software managing it. For example, NVIDIA’s CUDA parallel processing platform allows developers to program the GPU for general-purpose parallel processing tasks.
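CUDA’s programming model launches a grid of thread blocks, and each thread computes a global index from its block and thread coordinates. The following is a minimal Python emulation of that indexing scheme, not CUDA code: `launch` and `saxpy_kernel` are hypothetical helpers, and the loops run serially where a GPU would run the threads in parallel. The parameter names `block_idx`, `block_dim`, and `thread_idx` mirror CUDA’s `blockIdx`, `blockDim`, and `threadIdx` built-ins.

```python
def launch(kernel, grid_dim: int, block_dim: int, *args) -> None:
    """Serially emulate a 1-D kernel launch: every (block, thread) pair
    runs the same kernel body. On a GPU these would execute in parallel."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    # Global index, analogous to blockIdx.x * blockDim.x + threadIdx.x.
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the grid may contain more threads than elements
        out[i] = a * x[i] + y[i]


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(x)
block_dim = 4
grid_dim = (len(x) + block_dim - 1) // block_dim  # ceiling division: 2 blocks
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0, 60.0]
```

Because each thread touches only its own element `i`, the threads are independent, which is what lets a GPU run thousands of them at once.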

GPUs come in two main forms: discrete and integrated.

Discrete GPUs

Discrete GPUs are standalone chips dedicated to specific tasks. Initially designed for graphics rendering, these GPUs are now employed in machine learning (ML) and complex simulations. Typically located on a graphics card that slots into the motherboard, discrete GPUs can also be placed on other dedicated cards depending on the task.

Integrated GPUs

In the early 2010s, integrated GPUs (iGPUs) became more prevalent. These combine the CPU and GPU on a single chip. Early examples include Intel’s Celeron, Pentium, and Core lines, which are still widely used in laptops and PCs. Another form of iGPU is the system-on-a-chip (SoC), which integrates the CPU, GPU, memory, and networking components, commonly found in smartphones.

Virtual GPUs

GPUs can also be virtualized, allowing for software-based instances that share space on cloud servers. Virtual GPUs enable workload processing without the need for direct hardware management or maintenance.

Top Manufacturers of GPUs and Their Technologies

Intel Corporation

Intel has been a pioneer in graphics processing for PCs. The latest Intel Arc™ A-series graphics introduce advanced features to desktops and laptops, including machine learning capabilities, graphics acceleration, and ray tracing.

Integrated into 13th Gen Intel Core processors, Intel Iris Xe and Intel UHD Graphics deliver 4K HDR, 1080p gaming, and enhanced visual experiences for desktops. For laptops, Intel also offers Intel Iris Xe MAX graphics for high-quality performance.

Intel’s full suite of Data Center GPUs is designed to accelerate media, AI, and high-performance computing (HPC) workloads.

Advanced Micro Devices, Inc. (AMD)

Advanced Micro Devices (AMD) is a semiconductor company that designs graphics processing units (GPUs) and other computer technologies. AMD’s GPU technologies include:

AMD RDNA architecture: This architecture is designed for high-performance gaming and is featured in AMD’s Radeon RX 7000 Series graphics cards. AMD RDNA 3 is the latest version of this architecture and features AI accelerators and a chiplet GPU design.

AMD Radeon PRO Graphics: This series of GPUs is designed for professionals, artists, and creators. It supports up to 68 billion colors, 8K displays at 165 Hz, and full coverage of the Rec. 2020 color space.

AMD Smart Technologies: These technologies enhance system performance and efficiency by directing power where it’s needed. They also leverage the synergies between AMD Ryzen CPUs and AMD Radeon GPUs to improve game performance and reduce latency.

AMD Alveo Adaptive Accelerators: These accelerators are designed for AI inference efficiency and are tuned for natural language processing and video analytics applications.
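The “68 billion colors” figure quoted for Radeon PRO graphics above is consistent with 12 bits per color channel: three channels of 2^12 levels each give 2^36, roughly 68.7 billion, combinations. The short calculation below shows the arithmetic; the `displayable_colors` helper is illustrative.

```python
def displayable_colors(bits_per_channel: int, channels: int = 3) -> int:
    # Each channel (red, green, blue) has 2**bits levels; the total palette
    # is the product of the per-channel level counts.
    return (2 ** bits_per_channel) ** channels


for bits in (8, 10, 12):
    print(bits, f"{displayable_colors(bits):,}")
# 8 16,777,216
# 10 1,073,741,824
# 12 68,719,476,736
```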

NVIDIA

NVIDIA is a leading technology company renowned for its groundbreaking work in Graphics Processing Units (GPUs).

Key GPU Technologies from NVIDIA

CUDA: NVIDIA’s CUDA (Compute Unified Device Architecture) platform provides a programming model for developers to harness the power of GPUs for general-purpose computing tasks.

Tensor Cores: These specialized hardware units within NVIDIA GPUs are optimized for matrix operations, making them particularly efficient for training and inference in deep neural networks.

DLSS (Deep Learning Super Sampling): DLSS uses AI to render higher-quality images at lower resolutions, improving performance without sacrificing visual quality.

RTX (Ray Tracing Technology): RTX enables real-time ray tracing, creating more realistic lighting and reflections in games and other applications.

Max-Q Design: NVIDIA’s Max-Q design focuses on creating powerful, yet thin and quiet gaming laptops by optimizing power consumption and thermal efficiency.
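Tensor Cores perform fused matrix multiply-accumulate on small tiles, D = A × B + C. NVIDIA described its first-generation (Volta) Tensor Cores as operating on 4 × 4 tiles with FP16 inputs and FP16 or FP32 accumulation. The Python sketch below emulates only the arithmetic of that operation, assuming FP16 inputs and a higher-precision accumulator; it says nothing about how the hardware schedules the work, and `to_fp16` and `mma_tile` are hypothetical names.

```python
import struct


def to_fp16(x: float) -> float:
    # Round a Python float to the nearest IEEE 754 half-precision value
    # using struct's "e" (binary16) format code.
    return struct.unpack("<e", struct.pack("<e", x))[0]


def mma_tile(a, b, c):
    """D = A x B + C on small square tiles.

    A and B are rounded to FP16, as a tensor core's FP16 inputs would be;
    products are summed in full Python float precision, standing in for
    the FP32 accumulator.
    """
    n = len(a)
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [row[:] for row in c]  # start from the accumulator C
    for i in range(n):
        for j in range(n):
            acc = d[i][j]
            for k in range(n):
                acc += a16[i][k] * b16[k][j]  # multiply-accumulate
            d[i][j] = acc
    return d


a = [[0.1] * 4 for _ in range(4)]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
zeros = [[0.0] * 4 for _ in range(4)]
print(mma_tile(a, identity, zeros)[0][0])  # 0.0999755859375: 0.1 rounded to FP16
```

The visible rounding of 0.1 shows why lower-precision inputs are a trade-off: they let the hardware do far more multiplies per cycle, while the wider accumulator limits how much error builds up across the sum.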

4. NPU (Neural Processing Unit): The AI-Driven Specialist

How Does a Neural Processing Unit Work?

Neural Processing Units (NPUs) are specifically engineered to handle machine learning algorithms. While GPUs excel at processing parallel data, NPUs are designed to perform the complex computations required for neural networks that drive AI and ML processes.

Machine learning algorithms form the core of AI applications. As neural networks and their computations have grown more intricate, the need for specialized hardware has become apparent.

NPUs accelerate deep learning tasks by executing the operations neural networks require directly in hardware. General-purpose processors must build these operations from sequences of generic instructions, whereas NPUs are purpose-built to handle AI/ML operations efficiently.

NPUs are particularly effective at matrix multiplications and convolutions, the core operations of most neural networks. In applications such as image recognition and language processing, they can deliver faster inference with lower power consumption, improving organizational efficiency and cost-effectiveness.
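One common way to map a convolution onto matrix-multiplication hardware is im2col lowering: each input patch is unrolled into a row, so the whole convolution becomes a single matrix product. Whether a particular NPU uses this exact scheme is not stated here; the sketch below is a minimal pure-Python illustration assuming a single-channel image, one filter, stride 1, and no padding. Like deep learning frameworks, it computes cross-correlation (the kernel is not flipped).

```python
def im2col(image, k):
    """Unroll every k x k patch of a 2-D image (stride 1, no padding) into a row."""
    h, w = len(image), len(image[0])
    rows = []
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            rows.append([image[i + di][j + dj] for di in range(k) for dj in range(k)])
    return rows


def matvec(matrix, vector):
    # One dot product per row: the multiply-accumulate pattern matrix engines target.
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def conv2d_as_matmul(image, kernel):
    k = len(kernel)
    flat_kernel = [kernel[di][dj] for di in range(k) for dj in range(k)]
    out_w = len(image[0]) - k + 1
    flat = matvec(im2col(image, k), flat_kernel)
    # Reshape the flat result back into the output feature map.
    return [flat[r:r + out_w] for r in range(0, len(flat), out_w)]


img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(conv2d_as_matmul(img, [[1, 1], [1, 1]]))  # [[12, 16], [24, 28]]
```

With several filters, the kernel vector becomes a matrix with one column per filter, turning the matrix-vector product into a full matrix-matrix multiplication, exactly the operation matrix engines are built for.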

Top Manufacturers and Their NPU Technologies

Qualcomm’s NPU Technologies

Qualcomm has made significant strides in Neural Processing Unit (NPU) technology, evolving from the Hexagon DSP to the advanced Hexagon NPU. The company has consistently invested in incremental improvements across generations, culminating in an NPU capable of delivering 45 TOPS (Tera Operations Per Second) of AI performance.
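TOPS figures like this are peak numbers derived from the hardware’s multiply-accumulate (MAC) capacity. A common convention counts each MAC as two operations (a multiply and an add), so peak TOPS is roughly MAC units × 2 × clock frequency. The calculation below uses a hypothetical MAC count and clock rate chosen only to land near 45 TOPS; it is not Qualcomm’s published configuration. Vendors also usually quote TOPS at a specific numeric precision, often INT8, so figures at different precisions are not directly comparable.

```python
def peak_tops(mac_units: int, clock_ghz: float, ops_per_mac: int = 2) -> float:
    """Peak throughput in tera-operations per second.

    mac_units * ops_per_mac operations complete every clock cycle, and the
    chip runs clock_ghz * 1e9 cycles per second; divide by 1e12 for TOPS.
    """
    ops_per_cycle = mac_units * ops_per_mac
    return ops_per_cycle * clock_ghz * 1e9 / 1e12


# Hypothetical configuration for illustration only:
print(f"{peak_tops(mac_units=16_384, clock_ghz=1.4):.1f} TOPS")  # 45.9 TOPS
```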

Qualcomm’s journey in AI began as early as 2017, when the company first highlighted AI capabilities within the Hexagon DSP, using the DSP alongside GPUs for AI workloads. No performance figures were specified for the Hexagon 682 in the Snapdragon 835 SoC, released that year, but subsequent generations brought notable gains.

In 2018, the Snapdragon 845 featured the Hexagon 685, which achieved 3 TOPS thanks to the introduction of HVX (Hexagon Vector Extensions). By 2019, Qualcomm had integrated the Hexagon 698 into the Snapdragon 865, rebranding it as a fifth-generation “AI engine.” Successive generations followed, and the Snapdragon 8 Gen 3 and Snapdragon X Elite represent Qualcomm’s ninth-generation AI engines. These advancements underscore Qualcomm’s commitment to NPU technology and have delivered substantial improvements in AI performance and capabilities.

Intel’s NPU Technology

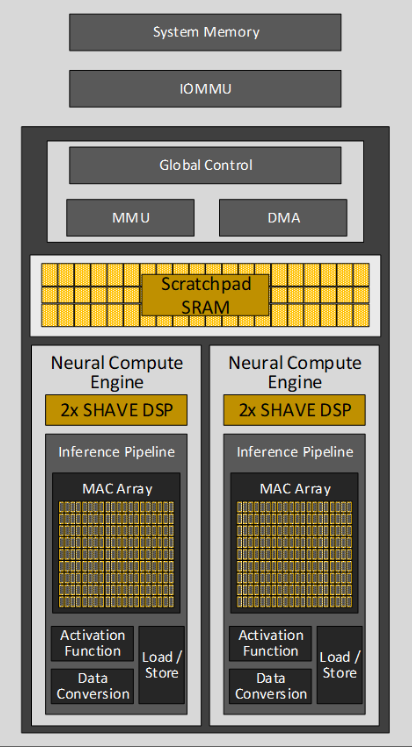

Intel’s Neural Processing Unit (NPU) is an advanced AI accelerator integrated into Intel Core Ultra processors. It features a distinctive architecture that combines compute acceleration with sophisticated data transfer capabilities.

Scalable Multi-Tile Design

At the core of the NPU’s compute acceleration is its scalable, tiled architecture known as Neural Compute Engines. This design supports high-performance AI processing through a distributed approach.

Hardware Acceleration Blocks

The Neural Compute Engines are equipped with specialized hardware acceleration blocks. These blocks are optimized for computationally intensive AI operations such as matrix multiplication and convolution, providing enhanced performance for these tasks.

Streaming Hybrid Architecture

In addition to dedicated AI operation units, the NPUs incorporate Streaming Hybrid Architecture Vector Engines (SHAVE). This hybrid architecture enables efficient parallel computing, addressing general computational needs and broadening the NPU’s application range.

DMA Engines

Direct Memory Access (DMA) engines are a critical component of the NPU. They ensure efficient data transfer between system memory (DRAM) and software-managed caches, optimizing overall data movement.
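A standard way to use DMA engines with software-managed on-chip memory is double buffering: while the compute engine works on one tile, the DMA engine copies the next tile into a second buffer. Intel’s description above does not say how its software schedules transfers, so the following is a generic timing model under assumed conditions: one DMA engine, one compute unit, two buffers, and fixed per-tile copy and compute times. `serial_time` and `double_buffered_time` are hypothetical helpers.

```python
def serial_time(n_tiles: int, t_dma: float, t_compute: float) -> float:
    # No overlap: each tile is copied in, then processed, one after another.
    return n_tiles * (t_dma + t_compute)


def double_buffered_time(n_tiles: int, t_dma: float, t_compute: float) -> float:
    """Event-level model of one DMA engine feeding one compute unit through
    two on-chip buffers; the next tile's copy overlaps the current compute."""
    dma_free = 0.0            # time the DMA engine becomes idle
    compute_free = 0.0        # time the compute unit becomes idle
    buffer_free = [0.0, 0.0]  # time each buffer may be overwritten
    for i in range(n_tiles):
        buf = i % 2
        # A copy needs both the DMA engine and an empty buffer.
        load_end = max(dma_free, buffer_free[buf]) + t_dma
        dma_free = load_end
        # Compute needs the tile to have arrived and the compute unit to be idle.
        compute_end = max(load_end, compute_free) + t_compute
        compute_free = compute_end
        buffer_free[buf] = compute_end  # buffer is reusable once its tile is consumed
    return compute_free


print(serial_time(8, 1.0, 2.0), double_buffered_time(8, 1.0, 2.0))  # 24.0 17.0
```

Once the pipeline fills, each tile costs max(copy, compute) instead of their sum, which is why overlapping data movement with computation matters for accelerator throughput.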

Memory Management

The NPU includes a built-in device Memory Management Unit (MMU) and an I/O Memory Management Unit (IOMMU). These components support multiple concurrent hardware contexts, ensuring security isolation between contexts in compliance with the Microsoft Compute Driver Model (MCDM) architectural standards.
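The isolation a device MMU provides comes from giving each hardware context its own address-translation table, so one context simply has no mapping to another context’s memory. The toy model below illustrates that idea only; the `DeviceMMU` class, the 4 KiB page size, and the fault behavior are assumptions for illustration and do not describe Intel’s actual MMU/IOMMU design or the MCDM interfaces.

```python
class DeviceMMU:
    """Toy per-context address translation (hypothetical design)."""

    PAGE_SIZE = 4096  # assumed page size for this sketch

    def __init__(self) -> None:
        # context id -> {virtual page number: physical page number}
        self.page_tables = {}

    def map_page(self, ctx: int, vpage: int, ppage: int) -> None:
        self.page_tables.setdefault(ctx, {})[vpage] = ppage

    def translate(self, ctx: int, vaddr: int) -> int:
        vpage, offset = divmod(vaddr, self.PAGE_SIZE)
        table = self.page_tables.get(ctx, {})
        if vpage not in table:
            # No mapping for this context: the access faults instead of
            # reaching memory that belongs to another context.
            raise PermissionError(f"context {ctx}: no mapping for {vaddr:#x}")
        return table[vpage] * self.PAGE_SIZE + offset


mmu = DeviceMMU()
mmu.map_page(ctx=1, vpage=0, ppage=42)
mmu.map_page(ctx=2, vpage=0, ppage=7)
print(mmu.translate(1, 0x10))  # 172048 (physical page 42, offset 16)
print(mmu.translate(2, 0x10))  # 28688 (physical page 7, offset 16)
```

The same virtual address resolves to different physical memory in each context, and an unmapped access raises an error rather than silently reading another context’s data.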

5. Key Differences: Breaking Down the Comparison

Processing Architectures

| Processor | Architecture | Characteristics |
| --- | --- | --- |
| CPU | Designed for general-purpose computing, with a few powerful cores optimized for sequential task execution. | Typically 4 to 64 cores, each capable of handling a wide variety of instructions and tasks, which makes CPUs versatile across a broad range of applications. |
| GPU | Designed for parallel processing, with many smaller, more efficient cores that excel at handling many tasks simultaneously. | Thousands of smaller cores optimized for processing large blocks of data in parallel, such as rendering graphics or performing complex mathematical computations. |
| NPU | Specialized hardware designed specifically to accelerate neural network computations and machine learning tasks. | Highly optimized for matrix operations and other computations common in AI and machine learning, often featuring custom architectures for high throughput and low latency. |

Power and Efficiency

| Processor | Power Consumption | Efficiency |
| --- | --- | --- |
| CPU | Generally consumes more power per core than GPUs and NPUs, because CPU cores are optimized for versatility and complex instruction sets. | Less efficient at large-scale parallel tasks, but highly efficient at executing sequential tasks. |
| GPU | Higher power consumption due to the large number of cores, balanced by the ability to perform many calculations in parallel. | More efficient than CPUs for tasks involving large-scale parallel processing, such as rendering and complex simulations. |
| NPU | Designed for high efficiency on the specific tasks they perform, often consuming less power for AI-related computations than general-purpose CPUs and GPUs. | Highly efficient for neural network tasks and AI computations due to custom architectures and optimizations. |
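Power draw and efficiency are different measures: a processor can draw more watts yet use less energy per task if it finishes the work faster. Energy per inference is simply power divided by throughput. The figures below are hypothetical, chosen only to illustrate the arithmetic, and are not measurements of any real CPU, GPU, or NPU.

```python
def energy_per_inference_mj(power_watts: float, inferences_per_second: float) -> float:
    # Joules per inference = watts / (inferences per second); x1000 gives millijoules.
    return power_watts / inferences_per_second * 1000.0


# Hypothetical (watts, inferences per second) pairs, for illustration only:
devices = {
    "CPU": (45.0, 150.0),
    "GPU": (250.0, 5000.0),
    "NPU": (5.0, 500.0),
}
for name, (watts, ips) in devices.items():
    print(f"{name}: {watts:.0f} W, {energy_per_inference_mj(watts, ips):.1f} mJ per inference")
# CPU: 45 W, 300.0 mJ per inference
# GPU: 250 W, 50.0 mJ per inference
# NPU: 5 W, 10.0 mJ per inference
```

In this made-up example the GPU draws over five times the CPU’s power but spends one-sixth of the energy per inference, which is the sense in which higher power consumption can be balanced by parallelism.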

Task Optimization

| Processor | Optimized For |
| --- | --- |
| CPU | A broad range of tasks, including operating systems, software applications, and general-purpose computing. Best for tasks requiring high single-threaded performance and complex branching. |
| GPU | Parallel processing tasks such as graphics rendering, scientific simulations, and data analysis. Performs exceptionally well with algorithms that can be parallelized. |
| NPU | Neural network workloads, including training and inference tasks in machine learning. Provides superior performance and efficiency for AI applications. |
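How much a workload gains from thousands of GPU cores depends on how much of it can actually run in parallel. Amdahl’s law, a standard idealized model not discussed in the original comparison, captures this: if a fraction p of the work parallelizes perfectly across n cores, the speedup is 1 / ((1 - p) + p / n). A minimal sketch, with `amdahl_speedup` as an illustrative name:

```python
def amdahl_speedup(parallel_fraction: float, n_cores: int) -> float:
    """Ideal speedup when parallel_fraction of the work splits evenly over
    n_cores and the rest stays serial. Ignores communication and memory costs."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_cores)


for p in (0.50, 0.95, 0.999):
    print(f"p={p}: 8 cores -> {amdahl_speedup(p, 8):.1f}x, "
          f"10,000 cores -> {amdahl_speedup(p, 10_000):.1f}x")
```

With half the work serial, no number of cores gets past 2x, which is why branch-heavy, sequential code stays on the CPU; with 95% parallel work, 10,000 cores still give just under 20x, and only at 99.9% do they deliver roughly 900x.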

Performance

| Processor | Performance |
| --- | --- |
| CPU | High performance for tasks requiring complex computations and decision-making; less effective for parallel tasks than GPUs and NPUs. |
| GPU | Exceptional performance for tasks requiring parallel processing, such as high-definition gaming, video rendering, and large-scale data computations. |
| NPU | Superior performance in AI and machine learning tasks due to specialized hardware; can outperform CPUs and GPUs in neural network computations and deep learning applications. |

6. The Future of Enterprise Computing

The future of enterprise computing will increasingly integrate CPUs, GPUs, and NPUs, each specializing in tasks suited to their unique architectures. This triad of processing units will enable more efficient and powerful processing across a range of workloads, reflecting a shift toward more specialized hardware solutions.
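Software running on such heterogeneous systems has to decide which unit handles each workload. Inference runtimes such as ONNX Runtime, for example, let applications list execution providers in priority order and fall back to the CPU. The sketch below illustrates that fallback idea with a hypothetical rule of thumb; the `Workload` fields, the 0.9 parallel-fraction threshold, and `pick_processor` are assumptions, not a published policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    is_neural_network: bool   # dominated by matrix multiplies / convolutions
    parallel_fraction: float  # share of the work that can run in parallel, 0..1


def preference_order(w: Workload) -> list:
    # Most specialized unit first; the CPU, which can run anything, comes last.
    if w.is_neural_network:
        return ["NPU", "GPU", "CPU"]
    if w.parallel_fraction >= 0.9:  # hypothetical threshold
        return ["GPU", "CPU"]
    return ["CPU"]


def pick_processor(w: Workload, available: set) -> str:
    for unit in preference_order(w):
        if unit in available:
            return unit
    return "CPU"  # assume every system has a CPU to fall back on


laptop = {"CPU", "GPU", "NPU"}
server = {"CPU", "GPU"}
vision = Workload("image classifier", True, 0.99)
print(pick_processor(vision, laptop))  # NPU
print(pick_processor(vision, server))  # GPU
print(pick_processor(Workload("config parser", False, 0.1), server))  # CPU
```

Keeping the CPU at the end of every preference list means a workload always has somewhere to run, even on machines without a GPU or NPU.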

Neural Processing Units (NPUs) will play a pivotal role as the reliance on artificial intelligence (AI) continues to grow. As AI technologies and machine learning models become more complex, there will be a heightened demand for NPUs. These units are expected to evolve with increased processing power and specialized functionalities tailored to specific AI tasks. The proliferation of NPUs in everyday devices, such as mobile phones and personal computers, will reflect their growing importance in handling sophisticated AI computations.

Central Processing Units (CPUs) will also see significant advancements. Future developments are likely to include more energy-efficient materials that reduce the high power consumption of current CPUs. Artificial intelligence will be used to make CPUs more adaptable, optimizing performance for the tasks they run most frequently. Moreover, advances in quantum computing may enable CPUs to process more complex queries at unprecedented speeds, further expanding their capabilities.

Graphics Processing Units (GPUs) are poised for a transformative evolution. Innovations in GPU architecture will focus on increasing core counts, improving memory bandwidth, and introducing specialized features for distinct workloads. These advancements will enhance GPU performance in areas such as data analysis, high-definition rendering, and complex simulations.

In conclusion, the future of enterprise computing will be characterized by a synergy between CPUs, GPUs, and NPUs. Each will address specific needs, driving more efficient and powerful computing solutions across various domains.
