Google has unveiled its latest advance in artificial intelligence: Gemini 1.5, a next-generation model that the company says delivers dramatic improvements in performance and efficiency. Under CEO Sundar Pichai, Google says its commitment to innovation and safety drives the development of Gemini 1.5. With an extended context window that can process up to 1 million tokens, Gemini 1.5 is designed to help developers and enterprises build more sophisticated AI applications.

Sundar Pichai, CEO of Google, remarked,

“Last week, we rolled out our most capable model, Gemini 1.0 Ultra, and took a significant step forward in making Google products more helpful, starting with Gemini Advanced. Today, developers and Cloud customers can begin building with 1.0 Ultra too — with our Gemini API in AI Studio and in Vertex AI.

Our teams continue pushing the frontiers of our latest models with safety at the core. They are making rapid progress. In fact, we’re ready to introduce the next generation: Gemini 1.5. It shows dramatic improvements across a number of dimensions and 1.5 Pro achieves comparable quality to 1.0 Ultra, while using less compute.

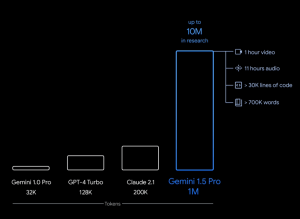

This new generation also delivers a breakthrough in long-context understanding. We’ve been able to significantly increase the amount of information our models can process — running up to 1 million tokens consistently, achieving the longest context window of any large-scale foundation model yet.

Longer context windows show us the promise of what is possible. They will enable entirely new capabilities and help developers build much more useful models and applications. We’re excited to offer a limited preview of this experimental feature to developers and enterprise customers. Demis shares more on capabilities, safety, and availability below.”

Advanced Features of Gemini 1.5

Demis Hassabis, CEO of Google DeepMind, writing on behalf of the Gemini team, describes Gemini 1.5 as the start of a new era in artificial intelligence. He says its advances could make AI more helpful for billions of people worldwide, and that the path from Gemini 1.0 to 1.5 involved rigorous testing and continual refinement.

1. Highly Efficient Architecture

At the core of Gemini 1.5 is a highly efficient Mixture-of-Experts (MoE) architecture, building on Google’s extensive research into Transformer and MoE models. Whereas a traditional Transformer functions as one large neural network, an MoE model is divided into smaller “expert” networks and learns to activate only the most relevant expert pathways for a given input. Because only part of the network runs for each input, this approach greatly improves efficiency while preserving quality, helping Gemini 1.5 learn complex tasks more quickly and making it more efficient to train and serve.
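
To make the routing idea concrete, here is a minimal sketch of a generic top-k MoE layer in plain Python. It is not Gemini’s implementation, whose routing details are not described in this article; the toy “experts” (simple scaling functions), the router vectors, and the choice of k=2 are all illustrative assumptions.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Toy 'expert' that scales its input. Real experts are feed-forward networks."""
    return lambda x: [scale * v for v in x]


def moe_layer(x, gate_weights, experts, k=2):
    """Route input vector x to the top-k experts and mix their outputs.

    gate_weights holds one (hypothetical, learned) router vector per expert.
    Returns the mixed output and the indices of the experts that actually ran.
    """
    # Router score for each expert: dot product of x with that expert's gate vector.
    logits = [sum(w * v for w, v in zip(g, x)) for g in gate_weights]
    # Sparse activation: keep only the k highest-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the router scores over the selected experts only.
    probs = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + p * v for o, v in zip(out, y)]
    return out, top


if __name__ == "__main__":
    random.seed(0)
    dim, n_experts = 4, 8
    gates = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_experts)]
    experts = [make_expert(float(i + 1)) for i in range(n_experts)]
    y, used = moe_layer([1.0, 0.5, -0.2, 0.3], gates, experts, k=2)
    print("experts used:", used)  # 2 of the 8 experts ran for this input
```

The key design point is that compute per input is tied to k, not to the total number of experts: adding experts grows the model’s capacity while each input still runs through only a few of them.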

2. Greater Context, More Helpful Capabilities

Gemini 1.5 also has a much longer context window. A model’s context window is measured in tokens, the small units (whole or partial words, images, video, audio, or code) that the model processes; the larger the window, the more information the model can take in from a single prompt. With a window of up to 1 million tokens, Gemini 1.5 Pro can process vast amounts of information in one go, including 1 hour of video, 11 hours of audio, or over 700,000 words. This lets developers and enterprises tackle tasks such as analyzing long documents or understanding large codebases, with more consistent and relevant output.
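
A practical consequence of a long context window is deciding up front whether a set of documents fits in one prompt. The sketch below checks an estimated token count against the 128,000-token standard window and the 1 million-token preview window mentioned in this article. The 4-characters-per-token ratio and the 8,000-token reply reserve are assumptions for illustration only; a real application should count tokens with the model’s own tokenizer.

```python
import math

# Assumptions (not from the article): ~4 characters per token for English text,
# and 8,000 tokens held back for the model's reply. Real tokenizers vary, so use
# the model's own token counter before relying on these numbers.
CHARS_PER_TOKEN = 4
RESERVED_FOR_OUTPUT = 8_000

STANDARD_WINDOW = 128_000    # standard Gemini 1.5 Pro context window
PREVIEW_WINDOW = 1_000_000   # experimental limited-preview context window


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (heuristic only)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def plan_context(documents: list[str]) -> str:
    """Say which context window a prompt made of these documents would need."""
    needed = sum(estimate_tokens(d) for d in documents) + RESERVED_FOR_OUTPUT
    if needed <= STANDARD_WINDOW:
        return "standard"
    if needed <= PREVIEW_WINDOW:
        return "preview"
    return "too_large"  # split, summarize, or retrieve only relevant parts


if __name__ == "__main__":
    report = "x" * 2_000_000  # stand-in for a ~500,000-token document
    print(plan_context([report]))  # prints "preview"
```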

3. Complex Reasoning and Problem-Solving

Gemini 1.5 Pro’s capabilities span multiple modalities, including text, video, and code. It can analyze, classify, and summarize large amounts of content within a single prompt. For example, given the 402-page transcripts from Apollo 11’s mission to the moon, it can reason about conversations, events, and details found across the document; given a 44-minute silent Buster Keaton film, it can analyze plot points and events and even reason about small details that could easily be missed. These examples show strong problem-solving across very different kinds of content.

Gemini 1.5 Pro can also solve problems across much longer blocks of code. Given a prompt containing more than 100,000 lines of code, it can reason across examples, suggest helpful modifications, and explain how different parts of the code work, helping developers streamline their workflow and improve their code.
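
One simple way to give a long-context model a whole codebase is to concatenate the source files into a single prompt, each preceded by its path so the model can refer to specific files. The helpers below are a hypothetical sketch of that approach; the names `pack_sources` and `read_sources`, the `### File:` header format, and the default character budget (about 1 million tokens at an assumed 4 characters per token) are illustrative choices, not part of any Google API.

```python
from pathlib import Path


def pack_sources(files, max_chars=4_000_000):
    """Concatenate (relative_path, source_text) pairs into one prompt string.

    Files are sorted by path so the layout is stable, and packing stops before
    the hypothetical character budget would be exceeded.
    """
    parts, total = [], 0
    for rel_path, text in sorted(files):
        block = f"### File: {rel_path}\n{text}\n"
        if total + len(block) > max_chars:
            break  # stop rather than cut a file in half
        parts.append(block)
        total += len(block)
    return "".join(parts)


def read_sources(root, suffixes=(".py",)):
    """Yield (relative_path, text) for every file under root with a matching suffix."""
    root_path = Path(root)
    for path in root_path.rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            rel = path.relative_to(root_path).as_posix()
            yield rel, path.read_text(encoding="utf-8", errors="replace")
```

A question is then appended after the packed files, for example `pack_sources(read_sources("my_repo")) + "\n\nExplain how the modules interact."`, where `my_repo` is a placeholder path. Keeping file boundaries explicit makes it easier for the model to cite where a suggested change belongs.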

Performance Excellence of Gemini 1.5 Pro

Gemini 1.5 Pro was tested on a comprehensive panel of text, code, image, audio, and video evaluations. It outperforms Gemini 1.0 Pro on 87% of the benchmarks Google uses for developing its large language models (LLMs), and it performs at a broadly similar level to Gemini 1.0 Ultra on the same benchmarks.
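
The 87% figure is a per-benchmark win rate, not an average score improvement: it is the share of benchmarks on which 1.5 Pro scored higher than 1.0 Pro. The sketch below shows that arithmetic. The function name and the rule that ties count as non-wins are illustrative assumptions; the article does not describe Google’s exact counting rules.

```python
def win_rate(new_scores: dict[str, float], old_scores: dict[str, float]) -> float:
    """Share of common benchmarks on which the new model scores strictly higher.

    Assumes higher is better on every benchmark; metrics where lower is better
    (error rates, for example) would need their sign flipped first.
    """
    shared = new_scores.keys() & old_scores.keys()
    if not shared:
        raise ValueError("no benchmarks in common")
    wins = sum(1 for name in shared if new_scores[name] > old_scores[name])
    return wins / len(shared)
```

Because a win rate ignores margins, it says nothing about how much better the new model is on each benchmark, only how often it comes out ahead.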

Gemini 1.5 Pro maintains high performance even as its context window grows. In the Needle In A Haystack (NIAH) evaluation, where a small piece of text containing a particular fact or statement is deliberately placed within a long block of text, Gemini 1.5 Pro found the embedded text 99% of the time, in blocks of data as long as 1 million tokens.
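
The structure of a NIAH-style test can be sketched in a few lines: insert a “needle” sentence at different depths of a long filler text, ask a question whose answer is in the needle, and measure how often the reply contains it. This is a simplified illustration, not Google’s evaluation harness; `model` is a hypothetical callable standing in for a real model API, and substring matching is just one possible scoring rule.

```python
def build_haystack(filler, needle, depth):
    """Insert the needle sentence at a relative depth (0.0 = start, 1.0 = end)."""
    pos = round(depth * len(filler))
    return " ".join(filler[:pos] + [needle] + filler[pos:])


def niah_recall(model, filler, needle, answer, question, depths):
    """Fraction of insertion depths at which the model's reply contains the answer.

    `model` is any callable mapping a prompt string to a reply string; it is a
    hypothetical stand-in for a real model API call.
    """
    hits = 0
    for depth in depths:
        prompt = build_haystack(filler, needle, depth) + "\n\n" + question
        if answer.lower() in model(prompt).lower():
            hits += 1
    return hits / len(depths)
```

A full evaluation would also vary the total context length (up to 1 million tokens in the results above) and report recall for each combination of length and depth, which is how a figure such as 99% is aggregated.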

Gemini 1.5 Pro also shows strong “in-context learning”: it can learn a new skill from information given in a long prompt, without additional fine-tuning. On the Machine Translation from One Book (MTOB) benchmark, which measures how well a model learns from information it has never seen before, the model was given a grammar manual for Kalamang, a language with fewer than 200 speakers worldwide. It learned to translate English to Kalamang at a level similar to a person learning from the same content.
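
In-context learning of this kind relies entirely on what is placed in the prompt. The sketch below assembles such a prompt: reference material first (for MTOB, the grammar manual text), then any worked translation pairs, then the sentence to translate. It is a hypothetical layout for illustration, not the MTOB benchmark’s official prompt format, and the function name and section labels are assumptions.

```python
def build_icl_prompt(reference, examples, query, src="English", tgt="Kalamang"):
    """Assemble a long in-context-learning prompt: reference material first,
    then worked translation pairs, then the sentence to translate.

    The model's weights are not updated; everything it "learns" comes from
    the text of this single prompt.
    """
    sections = ["Reference material:\n" + reference]
    if examples:
        pairs = "\n".join(f"{src}: {s}\n{tgt}: {t}" for s, t in examples)
        sections.append("Examples:\n" + pairs)
    sections.append(f"Translate from {src} to {tgt}.\n{src}: {query}\n{tgt}:")
    return "\n\n".join(sections)
```

Ending the prompt with the target-language label invites the model to continue with the translation itself. A long context window is what makes it feasible to include an entire grammar manual as the reference material.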

Ethics and Safety Testing

Google says its models undergo extensive ethics and safety testing in line with its AI Principles and robust safety policies. These evaluations are designed to identify and address potential risks, and the research insights they produce are fed back into Google’s governance processes, model development, and evaluations to continuously improve its AI systems.

Continuous Refinement and Safety Measures

Since introducing Gemini 1.0 Ultra, Google’s teams have continued refining the model to make it safer for a wider release. They have also conducted novel research on safety risks and developed red-teaming techniques to test for a range of potential harms, so that problems can be found and addressed before models reach a wider audience.

Responsible Deployment of Gemini 1.5 Pro

Ahead of the launch of Gemini 1.5 Pro, Google is taking the same approach to responsible deployment as it did with its Gemini 1.0 models, conducting extensive evaluations of content safety and representational harms. Google is also developing further tests that account for the novel long-context capabilities of Gemini 1.5 Pro.

Frequently Asked Questions about Gemini 1.5

1. What is Gemini 1.5?

Gemini 1.5 is the next generation of Google’s large language model (LLM), a significant improvement over the previous version (1.0). It offers dramatic enhancements in performance, efficiency, and context understanding.

2. What are the key features of Gemini 1.5?

- Dramatically improved performance

- Highly efficient Mixture-of-Experts (MoE) architecture

- Long context window of up to 1 million tokens (experimental)

- Better understanding and reasoning across modalities

- Enhanced in-context learning

3. How can I access Gemini 1.5?

A limited preview of Gemini 1.5 Pro (a mid-size multimodal model) is available to developers and enterprise customers through AI Studio and Vertex AI. For the wider release, Gemini 1.5 Pro will come with a standard 128,000-token context window, and Google plans to introduce pricing tiers that scale up to 1 million tokens as the model improves.

4. Is the 1 million token context window available now?

Yes, but only as an experimental feature in the limited preview. Early testers can try it at no cost during the testing period, though they should expect longer latency.

5. How does Gemini 1.5 address safety and ethics?

Google conducts extensive testing and follows its AI Principles to ensure responsible development and deployment. This includes ongoing research on safety risks, red-teaming techniques, and evaluations for content safety and representational harms.
