ChatGPT is often marketed as an elixir that can solve every problem in your business. But is that really true? Recently, Immunefi, a leading bug bounty and security services platform for the web3 domain, put ChatGPT to the acid test. The results were striking: 64% of the whitehats surveyed said ChatGPT provided inconclusive answers when used for vulnerability discovery. Immunefi published these findings on vulnerability discovery trends in its ChatGPT Security Report.

Here are the key findings from the report on ChatGPT's role in vulnerability discovery.

The number of real vulnerabilities reported by ChatGPT: zero

Since ChatGPT launched last year, Immunefi has received numerous bug reports generated with the AI tool. Despite their professional tone and presentation, these reports made unfounded claims about the vulnerabilities they described. According to Immunefi, not a single ChatGPT-generated bug report identified a real vulnerability. As a result, Immunefi banned accounts that submitted ChatGPT-generated bug reports without substantial proof behind their claims. In total, 21% of accounts that submitted bug reports to Immunefi were banned for relying on ChatGPT-generated content.

36.7% of whitehats use ChatGPT daily as part of their web3 security workflow

ChatGPT is fairly popular among web3 whitehats. While 36.7% of respondents reported using ChatGPT daily, another 29.1% said they use the generative AI tool at least once a week. Only 8.2% of whitehats avoid ChatGPT entirely for bug discovery or reporting.

Confidence in ChatGPT is mixed

ChatGPT is a very useful productivity tool. However, it hits a high wall when applied to vulnerability discovery. It can't replace manual code review or identify new and emerging threats that haven't been documented anywhere online.

While ChatGPT offers numerous benefits to users across marketing, sales, service, and financial management, its suitability for web3 security is doubtful.

While 75.2% of whitehats believe that ChatGPT can improve web3 research, 52.1% of respondents also raised serious concerns about its security and governance. Most whitehats felt that a lack of domain-specific knowledge and difficulty handling large-scale audits are holding back their adoption of ChatGPT for vulnerability discovery in web3 security research.

Top ChatGPT use cases in the web3 security domain are…

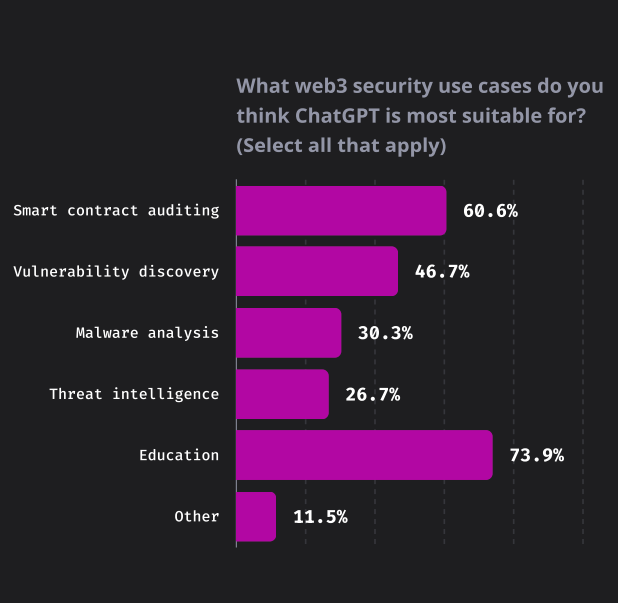

A large share of whitehats see ChatGPT as a strong enabler for web3 security education.

60.6% of whitehats think ChatGPT could be suitable for smart contract auditing in web3 security, and 46.7% see it as a useful tool for vulnerability discovery. Smaller shares found it useful for malware analysis (30.3%) and threat intelligence (26.7%), among other uses.

Whitehats say they would be more willing to adopt ChatGPT for web3 security research if it delivered more accurate results (60%) or made AI easier to use in threat management (55.2%).

According to the CEO of Immunefi, ChatGPT is simply not ready to handle web3 security, particularly vulnerability discovery. The industry should closely scrutinize the tool's feasibility before adding it to its existing security arsenal. However, ChatGPT adoption could rise sharply in the near future if developers fine-tune the technology to meet the specific needs of security professionals. The report highlights the importance of collaboration between AI developers, security professionals, and web3 security policymakers to ensure fair and transparent use across different functions.

So, have you tried generating a vulnerability discovery and threat intelligence report using ChatGPT in 2023?

If so, please share your assessment with us. We will review the outcomes and publish them on our site in line with our editorial guidelines.

[To share your insights with us, please write to sghosh@martechseries.com]