Cyber attacks have inflicted devastating financial losses on businesses and damaged their trust and reputation, so companies have an intrinsic incentive to build a stronger security set-up.

This incentive is complemented by regulatory mandates stipulating technological and organizational controls to maintain data confidentiality, integrity, availability, and privacy. With growing scrutiny of data protection practices, organizations must adopt a zero trust architecture to cope with security complexity, an increasingly sophisticated regulatory landscape, and mounting legal challenges.

PwC’s 23rd CEO survey reports that Swiss executives are also conscious of this major challenge, rating cyber risks as the third most threatening factor to their companies’ growth prospects. They expect a jumbled and complex regulatory landscape in the coming years. Although there is some awareness of cybersecurity among Swiss enterprises, their capabilities in cybersecurity are insufficient to effectively protect data, detect intrusions in a timely manner, and react adequately to cyber attacks.

Just treating the symptoms of cybersecurity breaches is not enough. True cyber resilience requires a foundational approach rooted in the Zero Trust architecture. This architecture refers to the framework in which information security measures are conceptualized and implemented in a manner aligned with internal goals and external legal requirements.

The IT security architecture covers the complete lifecycle of electronic data, from creation and use to transfer, storage, archiving, and destruction. It spans physical and virtual client and server endpoints, IT and business applications, infrastructure, and the connecting network.

Traditionally, security controls were deployed at the network edge. However, the increasing number of internet-connected devices within corporate networks has undermined that approach. A breached device becomes a Trojan horse and a way to bypass the perimeter defenses. A paradigm shift is needed: a Zero Trust model should be adopted.

Why is Implementing Zero Trust Architecture Important?

The Zero Trust architecture abandons the traditional approach of border and perimeter security in favor of microperimeters based on user access, location, and application hosting models. In this cleanly segmented network, sensitive data is protected, and all traffic is put through stringent verification and authorization. User behavior analytics and real-time threat intelligence play critical roles in spotting anomalies, and sandboxing can isolate potentially malicious data processing. Zero Trust does not stop at a company’s data center; it extends to controlled access and monitoring of data traffic to the cloud, web services, and outsourced IT services.

The concept of Zero Trust has garnered considerable interest over the last several years. The term is generally traced to Stephen Paul Marsh’s 1994 doctoral thesis; the Jericho Forum, founded in 2004, advanced the related idea of de-perimeterization, and Forrester later popularized the concept under “Zero Trust Architecture.” Adoption took off after Google implemented its own Zero Trust network, BeyondCorp, following the Operation Aurora cyber attacks in 2009.

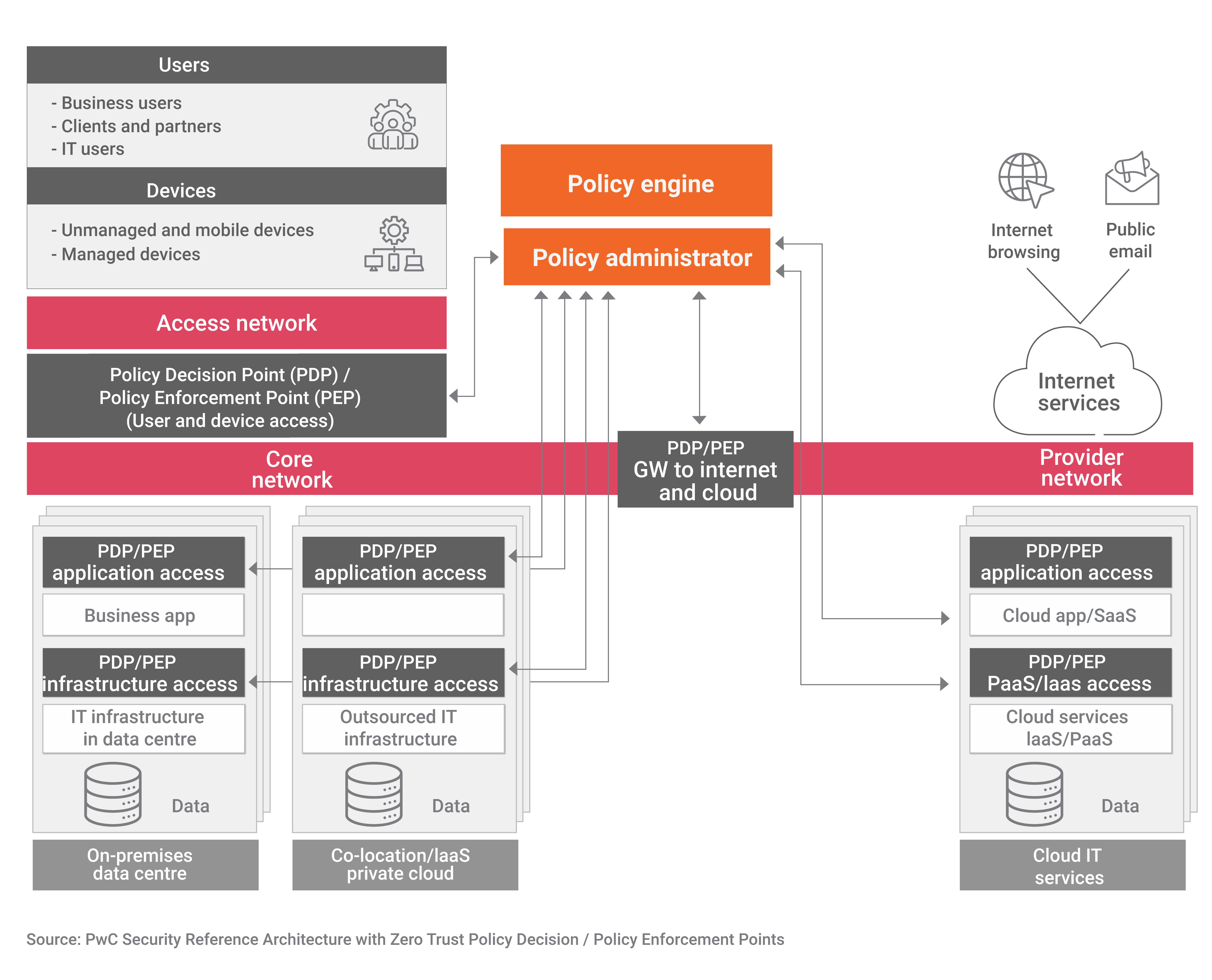

The diagram above shows the primary components of a Zero Trust architecture:

The Zero Trust architecture’s center consists of policy decision points (PDPs) and policy enforcement points (PEPs) within the corporate network. These components allow the creation of data enclaves and microperimeters. PDPs and PEPs act as collection points for security information and as decision hubs for critical decisions regarding transaction path or policy enforcement, such as requiring additional authentication factors or denying untrusted requests.

The picture illustrates that PDPs and PEPs are not situated only on the external perimeter but are placed strategically to segment the network into zones. Depending on an organization’s IT infrastructure, applications may be segregated across multiple server zones with different levels of trust. Additional segregation may be applied between middle-tier systems and back-end data storage. This ensures a layered approach to security.
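The split between deciding and enforcing can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Request` fields, the zone names, and the rule that the "data" zone requires an additional factor are hypothetical and not taken from any specific product or standard.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"  # require an additional authentication factor
    DENY = "deny"


@dataclass(frozen=True)
class Request:
    user: str
    authenticated: bool
    mfa_passed: bool
    device_trusted: bool
    target_zone: str  # hypothetical zone names such as "web", "app", "data"


# Hypothetical policy: zones that demand a second factor.
MFA_ZONES = {"data"}


def decide(request: Request) -> Decision:
    """Policy decision point: evaluate the request against the policy."""
    if not request.authenticated or not request.device_trusted:
        return Decision.DENY
    if request.target_zone in MFA_ZONES and not request.mfa_passed:
        return Decision.STEP_UP
    return Decision.ALLOW


def enforce(request: Request) -> str:
    """Policy enforcement point: ask the PDP, then act on its answer."""
    decision = decide(request)
    if decision is Decision.ALLOW:
        return f"forward {request.user} to zone '{request.target_zone}'"
    if decision is Decision.STEP_UP:
        return f"challenge {request.user} for an additional factor"
    return f"block {request.user}"
```

In this sketch a PEP placed in front of each zone would call `enforce`, so an untrusted device is blocked even when the request originates inside the corporate network.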

Know the Challenges in Implementing Zero Trust

Implementing a zero-trust security model requires a clear understanding of the challenges that commonly arise. These include complex infrastructure, budget implications, resource allocation, and the need for adaptive software solutions.

Complex Infrastructure

Organizational infrastructure varies and often comprises many products, from servers and proxies through databases and internal applications to Software-as-a-Service (SaaS). The hybrid nature of these environments, in which organizations employ a mix of cloud and on-premises setups, makes it harder to secure each segment adequately.

Additionally, it is challenging to secure legacy hardware and software alongside modern hardware and applications. This adds another level of complexity to any attempt at a full zero-trust deployment.

Cost and Effort

Zero-trust deployment requires significant time, human resources, and financial investments. Decision-making on network segmentation and access control requires strategic planning and collaborative efforts. Authenticating users and devices before allowing access is yet another layer of complexity.

Ensuring these are executed efficiently often requires significant financial investments, particularly if existing systems do not easily integrate with the desired zero-trust environment.

Flexible Software

When developing a zero-trust network, the software used must be able to adapt to multiple requirements. Integrating micro-segmentation tools, identity-aware proxies, and software-defined perimeter (SDP) will be key.

A zero-trust security model cannot be implemented with inflexible software. Doing so would force an organization to purchase multiple, partly redundant systems, which would complicate the security architecture. Flexible software makes designing and implementing the zero-trust security model much more efficient and effective.

How to Implement Zero-Trust Architecture?

Step 1: Sensitive Data Identification

The first step in implementing the zero-trust model is to systematically discover and identify sensitive data, otherwise referred to as the organization’s ‘crown jewels.’ This is vital even when an organization outsources its entire IT to external vendors: the organization itself remains responsible for protecting the data and complying with data protection and regulatory requirements. Thus, an inventory of the data repositories must be created.

This inventory will form the basis for determining the level of protection and the criticality of data according to both internal factors, such as intellectual property and business value, and external requirements, such as compliance with legal and regulatory requirements. The difference between the various data types must be identified, such as personally identifiable data, payment card industry data, business data, financial statements, and business secrets, including intellectual property and M&A information.

Even though it would be impossible to micro-segment all data, the organization must at least micro-segment the applications that process data, based on criticality, confidentiality, availability, integrity, and privacy levels. This enables the creation of data enclaves with segmented sub-perimeters.

Creating an inventory of all data processing activities of an organization must be a thorough process across the whole IT landscape. This includes creating an inventory of identified and validated data sets and ensuring that data categorization and classification are accurate and data ownership is assigned. At the same time, an inventory of all applications should be compiled, and the IT infrastructure and sourcing options should be analyzed.

Important details, such as the locations where data is stored, backups, file shares, and other storage facilities, must be meticulously recorded. This links the data repository inventory with the application inventory for decisions regarding data processing activities and access permissions, and it forms the basis for identifying sensitive data traffic.
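One way to picture such an inventory is as a list of typed records. The following Python sketch is illustrative only: the `DataRepository` fields, the four sensitivity levels, and the helper names `crown_jewels` and `unowned` are assumptions, not a prescribed schema.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Optional, Set


class Sensitivity(IntEnum):
    # Hypothetical classification scheme; use the organization's own levels.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    SECRET = 3


@dataclass
class DataRepository:
    name: str
    data_types: Set[str]          # e.g. {"PII", "PCI", "financial"}
    owner: Optional[str]          # None means ownership is not yet assigned
    storage_locations: List[str]  # databases, file shares, backups, ...
    sensitivity: Sensitivity
    applications: List[str] = field(default_factory=list)  # link to the app inventory


def crown_jewels(inventory, threshold=Sensitivity.CONFIDENTIAL):
    """Return the repositories classified at or above the threshold."""
    return [repo for repo in inventory if repo.sensitivity >= threshold]


def unowned(inventory):
    """Return the names of repositories that still lack a data owner."""
    return [repo.name for repo in inventory if repo.owner is None]
```

Running `unowned` over the inventory gives a simple completeness check before moving on to data flows, since every later access decision depends on a named data owner.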

Step 2: Determining Sensitive Data Flows

The second step in implementing zero trust architecture involves determining your organization’s sensitive and critical data flows. Identification requires collaboration between IT and business people to understand the complexities of data dependencies, application needs, IT components, data traffic patterns, and access rights.

A good understanding of the business architecture will serve as the base for understanding the types of data being processed across the various departments or corporate functions. Linking with the IT applications in charge of processing this data will help in an overall understanding of the data flow.

A more practical approach to identifying sensitive data flows is the use of swimlanes, which help define the roles of business users involved in processing sensitive data in business processes through IT applications. One needs to define whether these business applications are locally managed and run by the IT unit, outsourced to partners, or provided as cloud services.

This requires good device management practices for various categories: end-user devices, including unmanaged, BYOD, and mobile devices; network devices; corporate IT infrastructure in data centers; and the inventory of sourced IT services from public or private clouds.

Verifying captured sensitive data flows is a combined effort, with a contextual background from the business side and technical competence from IT. This ensures that the business applications, data repositories identified in Step 1, and relevant devices are included in the analysis of sensitive data traffic.

Once the sensitive data flows have been correctly identified, suitable measures must be applied to protect data at rest, in motion, and in use, thus strengthening the organization’s overall security posture.
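A data flow map can be reduced to records that tie a device category, an application, and a repository together. The Python sketch below is a simplified illustration under stated assumptions: the `DataFlow` fields, the hosting labels, and all repository names are hypothetical, and only protection in motion is checked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    source: str        # device category or system that initiates the flow
    application: str   # business application that processes the data
    repository: str    # data repository from the step 1 inventory
    hosting: str       # "on_premises", "outsourced", or "cloud"
    encrypted_in_transit: bool


def sensitive_flows(flows, sensitive_repositories):
    """Keep only flows that touch a repository classified as sensitive."""
    return [f for f in flows if f.repository in sensitive_repositories]


def unprotected_flows(flows, sensitive_repositories):
    """Sensitive flows that still lack protection for data in motion."""
    return [
        f
        for f in sensitive_flows(flows, sensitive_repositories)
        if not f.encrypted_in_transit
    ]
```

The result of `unprotected_flows` is a worklist for IT and the business side to review together, which mirrors the combined verification effort described above.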

Step 3: Defining Micro-Perimeters and Data Enclaves

Sub-perimeters, or micro-perimeters, are defined based on the results of steps 1 and 2: the identification of sensitive data and an analysis of data flows within networks, sites, and IT systems. Access to a repository of sensitive data should follow the need-to-know and need-to-do principles, supported by the principle of least privilege for access controls.

This boils down to identifying data owners, who must understand and define the roles that need access to specific data, approve access for users within those roles, and manage entitlements. Managing user entitlements, however, is a continuous activity that requires frequent changes and auditing to remain effective.

A good user management process distinguishes three types of IT users: business users, who have access to applications that process sensitive data; privileged IT users, who can modify user access rights and security configuration; and clients and partners, who require access to sensitive data. Each group of users, perhaps further subdivided, will require a set of controls to manage joiner-mover-leaver processes, user entitlements, and regular recertification cycles.

While user access management is essential, storage and processing resources with similar data protection requirements can also be grouped into ‘enclaves.’ Enclaves, comprising only endpoints given a specified level of trust, consolidate policy enforcement at the PEP. This reduces the burden of enforcing security on each individual IT system and application. A data enclave can be a separate network segment, which allows for stringent control over access to sensitive data.
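Grouping resources into enclaves can be sketched as bucketing them by their protection requirements. This Python example is a rough illustration: the two attributes used as the enclave key, the resource names, and the `entitlements` mapping of owner-approved roles are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Resource:
    name: str
    confidentiality: str  # hypothetical levels: "low", "medium", "high"
    contains_pii: bool


# An enclave is identified here by the protection requirements its members share.
EnclaveKey = Tuple[str, bool]


def build_enclaves(resources) -> Dict[EnclaveKey, List[str]]:
    """Group resources with identical protection requirements into enclaves."""
    enclaves = defaultdict(list)
    for resource in resources:
        key = (resource.confidentiality, resource.contains_pii)
        enclaves[key].append(resource.name)
    return dict(enclaves)


def may_access(role: str, enclave: EnclaveKey,
               entitlements: Dict[EnclaveKey, Set[str]]) -> bool:
    """Least privilege: a role gets in only if the data owner approved it."""
    return role in entitlements.get(enclave, set())
```

Because `may_access` defaults to an empty set, an enclave with no approved roles is closed to everyone, which is the default-deny behavior that least privilege implies.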

Step 4: Establishing the Security Policy and Control Framework

After sensitive data has been inventoried and the data flows have been established, the next step is to establish a comprehensive security policy and control framework.

The framework defines criticality based on the criteria of confidentiality, availability, integrity, and authenticity, with added criteria for privacy, especially for data that includes PII. Technical and organizational security controls are defined within this framework in line with industry best practices.

The security policy framework forms the basis of the IT security architecture and ensures policy adherence. As in NIST SP 800-207, this would include the use of policy enforcement points (PEPs) and policy decision points (PDPs) in strategic locations of the network to strictly enforce the policy and take appropriate action in case of policy violation.

At a minimum, the following network segments should be considered:

- PDPs/PEPs for user & device access, to restrict access to sensitive data to validated and authorized users with proper entitlements.

- PDPs/PEPs at the application level, to enforce the principle of least privilege and strong application security controls.

- PDPs/PEPs at the IT infrastructure level, to restrict privileged IT roles and to control access to IT systems and encryption technologies.

- PDPs/PEPs for internet & cloud services, to block unauthorized access and enforce security measures for data protection.

The security policy and control framework applies to the entire IT landscape, from on-premises to outsourced and cloud-based environments. Access to IT services and data is based on multiple factors, including but not limited to the user ID, connecting device, location, and requested service, with a conditional trust check performed before access is granted.
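The conditional trust check can be sketched as a score built from several signals and compared against a per-service threshold. The Python example below is a simplified illustration, not a reference design: the signals, the location codes, the service names, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessContext:
    user_id: str
    user_verified: bool   # e.g. password plus a second factor succeeded
    device_managed: bool  # connecting device is enrolled and compliant
    location: str         # hypothetical coarse location code
    service: str          # requested IT service


# Hypothetical policy data; real values would come from the policy framework.
TRUSTED_LOCATIONS = {"CH", "DE"}
SERVICE_THRESHOLDS = {"hr-portal": 3, "intranet": 2}


def trust_score(ctx: AccessContext) -> int:
    """Count how many trust signals are satisfied (0 to 3)."""
    signals = [
        ctx.user_verified,
        ctx.device_managed,
        ctx.location in TRUSTED_LOCATIONS,
    ]
    return sum(signals)


def grant_access(ctx: AccessContext) -> bool:
    """Grant access only if the score meets the threshold for the service."""
    threshold = SERVICE_THRESHOLDS.get(ctx.service)
    if threshold is None or not ctx.user_verified:
        return False  # default deny: unknown service or unverified user
    return trust_score(ctx) >= threshold
```

An unmanaged device from a trusted location would reach the intranet but not the HR portal in this sketch, which shows how one set of signals can yield different outcomes depending on data criticality.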

Step 5: Constant Security Monitoring and Intelligent Analysis

The fifth step in applying the zero trust concept is to set up continuous security monitoring and intelligent analysis to proactively identify and respond to security threats.

All endpoints, gateways (including the PDPs and PEPs), and sensitive data traffic need logging and real-time inspection to detect malicious activities. Depending on organizational maturity and size, the monitoring functions might be distributed across various IT divisions, which can make security incidents harder to detect.

The key areas for monitoring would include:

- IT operations monitoring, which assures the availability of IT services, network components, and communication links.

- Enterprise security monitoring, which covers all IT infrastructure components and is powered by a centralized log repository and a security information and event management (SIEM) system that provides event correlation and incident detection.

- Compliance monitoring, which covers security configuration baselines, file integrity monitoring, IT asset discovery, vulnerability scanning, and data breach detection.

Integrating compliance monitoring into enterprise security monitoring helps assure the effectiveness of IT hygiene and security controls. Compliance alerts can then provide key evidence for internal and external audits, simplifying the audit process and allowing for continuous auditing.

The key difference between enterprise security monitoring and compliance monitoring boils down to the time taken to react to alerts and deviations. Security alerts may immediately trigger the security operations center, whereas compliance monitoring verifies IT hygiene and quality aspects to ensure the effectiveness of security controls. Compliance monitoring also supports evidence gathering for audits and speeds up the delivery of evidence to auditors, which in turn improves the overall security posture.
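Event correlation over a centralized log repository can be illustrated with a small sliding-window rule. This Python sketch is only an example of the idea: the `LogEvent` fields, the source names, and the rule of three failures within 60 seconds are assumptions, and a real SIEM would apply far richer rules.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEvent:
    timestamp: float  # seconds since an arbitrary epoch
    source: str       # hypothetical log source, e.g. "vpn-gateway"
    user: str
    outcome: str      # "success" or "failure"


def correlate_failures(events, threshold=3, window_seconds=60.0):
    """Flag users with `threshold` failures inside the time window.

    Failures are counted per user across all sources, which is the point of
    a central repository: each source alone may stay below the threshold.
    """
    recent = defaultdict(deque)  # user -> timestamps of recent failures
    flagged = []
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.outcome != "failure":
            continue
        window = recent[event.user]
        window.append(event.timestamp)
        # Drop failures that have fallen out of the sliding window.
        while event.timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold and event.user not in flagged:
            flagged.append(event.user)
    return flagged
```

Distributing monitoring across IT divisions would split these events between separate stores, and no single store would see enough failures to raise the alert.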

Step 6: Applying Security Orchestration and Automation

Once enterprise security monitoring and compliance monitoring are in place, the next move is towards security orchestration and automation.

Automated security analytics can speed up the detection and remediation of cyber threats. For instance, once a malicious act has been identified, the affected user can quickly be isolated from the network, limiting the impact of the incident. Integration with the HR department’s joiner/mover/leaver process allows trusted identities and assigned roles to be kept current efficiently and reliably, because access controls are updated automatically.

A layer for orchestration is needed to implement automation effectively. This layer will integrate all policy enforcement points with the policy administrator. It will be able to integrate many features, such as threat intelligence feeds or DNS sinkhole information, to increase the identification of policy breaches and suspicious handling of sensitive data.

Various tools and data sources must be integrated for orchestration to work seamlessly. Security Orchestration, Automation, and Response (SOAR) solutions simplify security operations by collecting data from multiple sources and reacting to security events without human intervention. The goal is to improve the efficiency of security incident response functions in line with industry standards and best practices.
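The two automation examples above, isolating a user after a malicious act and following the joiner/mover/leaver process, can be sketched as a tiny playbook. This Python example is illustrative only: the `Directory` class is a stand-in for a real identity store, and the event and alert dictionaries, the severity scale, and the threshold of 7 are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Directory:
    """Stand-in for an identity store; a real setup would call an IAM system."""
    roles: Dict[str, Set[str]] = field(default_factory=dict)
    isolated: Set[str] = field(default_factory=set)


def apply_hr_event(directory: Directory, event: dict) -> None:
    """Mirror a joiner/mover/leaver event from HR into the access controls."""
    kind, user = event["type"], event["user"]
    if kind in ("joiner", "mover"):
        # Replace roles rather than add to them, so movers do not keep old rights.
        directory.roles[user] = set(event["roles"])
    elif kind == "leaver":
        directory.roles.pop(user, None)
    else:
        raise ValueError(f"unknown HR event type: {kind}")


def handle_alert(directory: Directory, alert: dict,
                 severity_threshold: int = 7) -> List[str]:
    """Playbook: isolate the user on severe alerts, otherwise open a ticket."""
    user = alert["user"]
    if alert["severity"] >= severity_threshold:
        directory.isolated.add(user)
        return [f"isolated {user}"]
    return [f"ticket opened for {user}"]
```

Replacing a mover's roles instead of extending them is a deliberate choice in this sketch, since accumulated entitlements are a common source of excess privilege.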

Finally

In 2024, a mature zero-trust approach is holistic and adaptive: it combines state-of-the-art technology with strict access management and continuous learning to counter a perpetually changing threat landscape. It provides a robust defense against cyber threats and protects information, brand reputation, and revenue for businesses.

These insights and predictions are based on prevailing trends and expert assessments, offering a realistic view of how the business landscape has changed regarding zero trust and how it will continue to change, at least through 2024.

FAQs

1. How much time does it take to implement zero trust?

The implementation duration of zero trust hinges on the chosen solution and the intricacy of your network. Allocating extra time initially to assess the assets requiring protection can expedite the subsequent phases of the process.

2. What are the fundamental elements of zero trust network access (ZTNA)?

ZTNA facilitates controlled access based on identity and context, departing from traditional enterprise perimeters to micro-perimeters around specific resources and their associated data.

By transitioning focus from broad “attack surfaces” to targeted “protect surfaces,” ZTNA customizes access control policies according to the criticality of resources and data sensitivity.

3. How does zero trust help in redefining IT security?

Zero trust redefines IT security by shifting from a perimeter-based model to a model that verifies and secures every user, device, and transaction, regardless of their location within or outside the network.

4. What strategies can organizations employ to initiate the transformation towards Zero Trust security, considering its time-consuming and complex nature?

To initiate the transformation towards Zero Trust security, organizations are advised to begin in a well-understood area of the network, characterized by clear comprehension of data types and data flows. By doing so, they can progressively transform other network segments without causing disruption to their business environment.